Google Rankings Won't Save You If AI Assistants Can't Find Your Answers

Google Search Console (GSC) shows your Google rankings. But ChatGPT, Perplexity, and Gemini pull from different signals. Learn what AI assistants actually need in order to cite your content.

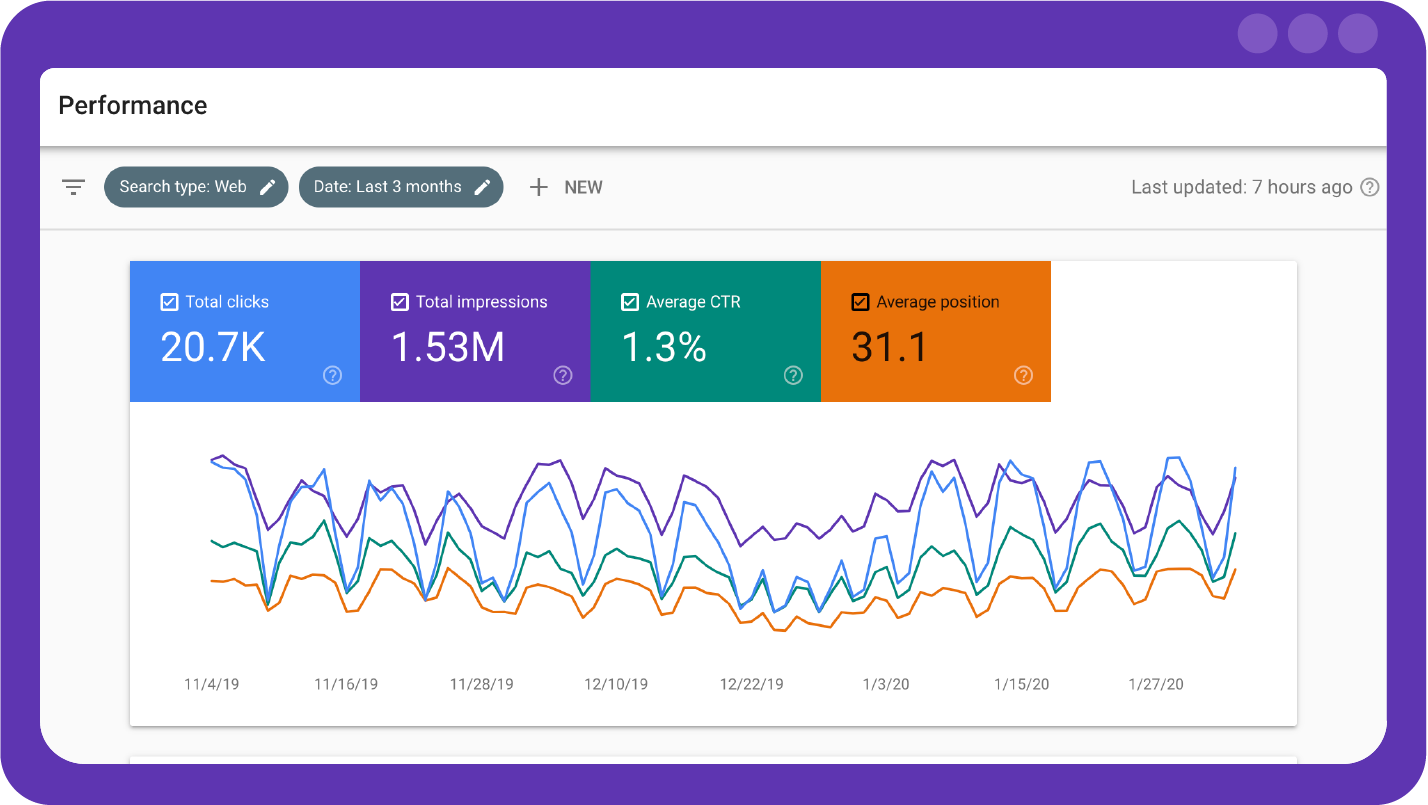

Google Search Console tells you where you rank. It shows which keywords drive traffic, which pages earn impressions, and where you're gaining or losing ground.

Useful? Absolutely. Complete? Not anymore.

Here's the shift: search is splitting into two distinct channels. Traditional search—where users type queries, scan results, and click links—still matters. But AI-powered search is growing fast. ChatGPT, Perplexity, Gemini, and Copilot now answer questions directly, pulling information from across the web and citing sources inline.

These AI assistants don't care about your position-seven ranking. They care about whether your content answers the question clearly, whether it's structured in a way they can parse, and whether it's fresh enough to trust.

A site can rank well on Google and remain invisible to AI assistants. The signals that drive one don't automatically drive the other.

This creates a new visibility equation. Keyword optimization still matters for traditional search. But AI visibility requires something more: content that's machine-readable, structurally explicit, and easy for language models to extract and cite.

Let's break down how these two worlds connect—and what changes when you optimize for both.

What GSC Reveals (And What It Doesn't)

Google Search Console remains essential for understanding traditional search performance. The data it surfaces helps you make smart decisions about content, keywords, and optimization priorities.

The "Search results" report shows queries driving organic traffic to your site. Sorting by clicks reveals your highest-value keywords—the terms that already generate visits and deserve ongoing monitoring. (Competitor Report: Method 1, "High-Value Keywords")

These high-value keywords need protection. Even small ranking drops on your top terms can cause significant traffic losses. Monthly monitoring lets you catch declines early and respond before problems compound.

GSC also exposes opportunity gaps. Keywords ranking in positions 11–30 represent "striking distance" terms—pages close to page one that need a push to break through. Research from Backlinko found that only about 0.63% of searchers click on second-page results. (Competitor Report: Method 3, citing Backlinko) Moving a single keyword from position 11 to position 9 can meaningfully change traffic.
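The striking-distance filter can be sketched in a few lines of Python. This is a minimal illustration, assuming you have exported query rows from GSC into dictionaries; the field names (`query`, `clicks`, `impressions`, `position`) and the sample rows are hypothetical, not a real export format or real data.

```python
from typing import TypedDict


class QueryRow(TypedDict):
    query: str
    clicks: int
    impressions: int
    position: float  # average position, which is usually fractional


def striking_distance(
    rows: list[QueryRow], low: float = 11.0, high: float = 30.0
) -> list[QueryRow]:
    """Return rows whose average position is in [low, high], most impressions first."""
    hits = [r for r in rows if low <= r["position"] <= high]
    return sorted(hits, key=lambda r: r["impressions"], reverse=True)


# Hypothetical sample rows for illustration only.
sample: list[QueryRow] = [
    {"query": "project management software comparison", "clicks": 120, "impressions": 4000, "position": 3.2},
    {"query": "kanban vs scrum tools", "clicks": 4, "impressions": 900, "position": 12.4},
    {"query": "free gantt chart maker", "clicks": 1, "impressions": 2500, "position": 18.0},
    {"query": "enterprise pm suite pricing", "clicks": 0, "impressions": 50, "position": 44.1},
]

for row in striking_distance(sample):
    print(row["query"], row["position"])
```

Sorting by impressions puts the terms with the most search demand at the top, which is one reasonable way to decide which page to push first.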

Then there's click-through rate analysis. Position benchmarks help identify underperforming pages: position one averages roughly 39.8% CTR, position two about 18.7%, position three around 10.2%. (Competitor Report: Method 4, citing First Page Sage) A page ranking in position two with only 5% CTR signals a problem—perhaps a weak title tag, a missing rich snippet, or content that doesn't match search intent.
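To make the benchmark comparison concrete, here is a small sketch that measures how far a page falls short of the position benchmarks quoted above. The function name and the rounding of average position to the nearest whole position are assumptions made for illustration.

```python
from typing import Optional

# Benchmarks quoted in the text: position -> expected CTR as a fraction.
BENCHMARK_CTR = {1: 0.398, 2: 0.187, 3: 0.102}


def ctr_shortfall(position: float, clicks: int, impressions: int) -> Optional[float]:
    """Return benchmark CTR minus actual CTR, or None when no benchmark applies."""
    benchmark = BENCHMARK_CTR.get(round(position))
    if benchmark is None or impressions == 0:
        return None
    return benchmark - clicks / impressions


# The example from the text: position two with a 5% CTR (50 clicks on 1,000 impressions).
gap = ctr_shortfall(position=2, clicks=50, impressions=1000)
if gap is not None and gap > 0:
    print(f"CTR is {gap:.1%} below the position benchmark")
```

A positive shortfall only says the page underperforms the average; it does not say why, so the title tag, snippet, and intent match still need a manual look.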

GSC data is powerful for diagnosing Google performance.

But here's the limitation: GSC shows you nothing about AI assistants.

ChatGPT doesn't report which of your pages it referenced. Perplexity doesn't send you an impressions count. Gemini doesn't log your "position" in its answers.

These systems pull information from the web, synthesize it, and cite sources—but they operate on entirely different logic than traditional search rankings. A page can rank position three for a high-volume keyword and never appear in an AI-generated answer. Another page might rank position 15 but get cited repeatedly because its content structure makes extraction easy.

GSC can't reveal this. It was built for a search world where rankings determined visibility. That world is expanding.

How AI Assistants Decide What to Cite

AI search works differently from traditional search.

When someone asks ChatGPT or Perplexity a question, the system doesn't return a ranked list of links. It generates an answer—synthesizing information from multiple sources, evaluating relevance, and citing pages that contributed to the response.

This changes what matters for visibility.

Traditional search rewards signals like backlinks, domain authority, and keyword optimization. These factors still influence whether your content gets indexed and appears in AI systems' knowledge bases. But the citation decision—whether an AI assistant references your specific page in its answer—depends on additional factors.

Content structure matters. AI systems parse web pages to extract information. Pages with clear headings, logical organization, and explicit statements are easier to process than walls of text. When your page directly answers a question in a parseable format, AI assistants can pull that answer cleanly.

Structured data matters. JSON-LD schema markup provides explicit signals about what your page contains. Article schema identifies authorship, publication dates, and topics. Product schema specifies prices, availability, and features. FAQ schema marks up question-answer pairs for easy extraction. This structured layer helps AI systems understand not just what your page says, but what it is.
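As a rough illustration of what Article markup looks like, the sketch below builds a JSON-LD object with Python's standard `json` module. The property names come from schema.org's Article type; the function name and all values are placeholders, and a real page would usually carry more properties than this.

```python
import json


def article_jsonld(headline: str, author_name: str,
                   date_published: str, date_modified: str) -> str:
    """Build a minimal schema.org Article object and serialize it as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,  # ISO 8601 date
        "dateModified": date_modified,    # ISO 8601 date
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = article_jsonld(
    headline="Example headline",
    author_name="Example Author",
    date_published="2025-01-15",
    date_modified="2025-03-01",
)
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The printed `<script>` element is what would be embedded in the page's HTML.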

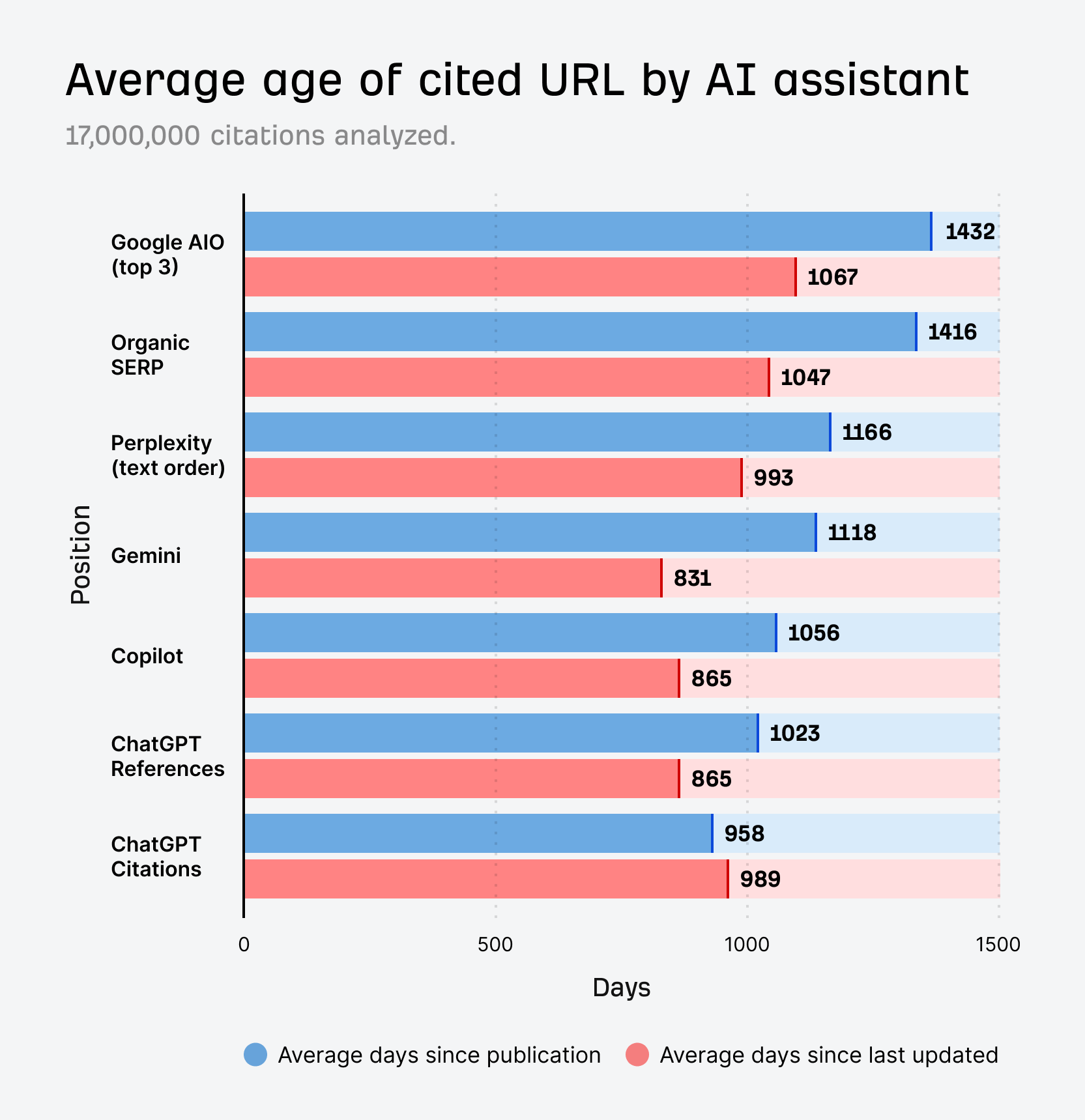

Freshness matters. Studies have found that AI-cited content tends to be significantly newer than average organic search results. ChatGPT in particular shows a strong preference for recent content. (Related industry research on AI citation patterns) Outdated pages, even high-ranking ones, may be passed over in favor of fresher alternatives.

Answer completeness matters. AI systems favor content that directly addresses the query without requiring users to piece together partial information. Comprehensive coverage of a topic, including related subtopics and edge cases, increases citation likelihood.

None of this shows up in GSC. You can have excellent keyword rankings and poor AI visibility simultaneously. The metrics that tell you one don't predict the other.

The Two-Channel Visibility Framework

Think of modern search as two parallel channels, each requiring its own optimization approach.

Channel One: Traditional Search

This is where GSC data lives. Keywords, rankings, click-through rates, impressions. The optimization playbook here is well-established:

- Identify high-value keywords and protect them through content freshness and competitive monitoring

- Find striking distance opportunities and push them to page one through improved on-page SEO

- Analyze CTR data to diagnose title tag and meta description problems

- Discover related keywords to expand topical coverage

- Filter for mobile-specific patterns to capture device-segmented search behavior

The competitor report details these methods thoroughly. You sort GSC queries by clicks to find your most valuable terms. You filter for positions 11–30 to surface near-page-one opportunities. You compare CTR against position benchmarks to identify underperformers. You examine mobile-only data to uncover device-specific keyword patterns. (Competitor Report: Methods 1–5)

This channel still drives significant traffic. Optimizing for it remains essential.

Channel Two: AI Search

This is where traditional SEO data falls short. AI assistants use different inputs, different logic, and different success metrics.

Optimization here requires:

- Structuring content so AI systems can parse it cleanly: clear headings, direct answers, logical flow

- Implementing schema markup that explicitly defines content types, authorship, and relationships

- Maintaining content freshness, since AI systems favor recent information

- Ensuring technical accessibility, so AI crawlers can retrieve and process your pages without errors

These two channels aren't mutually exclusive. Improvements in one often help the other. Fresh content with strong structure ranks better in Google and gets cited more by AI assistants. Valid schema markup can earn rich snippets in traditional search and improve machine readability for AI extraction.

But the channels aren't identical either. A page optimized purely for keyword density might rank well in Google while remaining difficult for AI systems to cite. A page with excellent structure but weak backlinks might get cited by ChatGPT despite modest search rankings.

Winning requires attention to both.

Connecting GSC Insights to AI Visibility

Here's where the two channels merge: GSC data can inform your AI visibility strategy, even though it doesn't directly measure AI performance.

High-value keywords signal topics worth structuring.

The terms already driving traffic to your site represent topics where you have authority. These pages deserve structured data investment. If you rank well for "project management software comparison," ensure that page includes Product schema, FAQ schema for common questions, and clear comparative tables that AI systems can extract.

Striking distance keywords reveal content gaps.

Pages ranking in positions 11–30 often lack the comprehensive coverage that top-ranking pages have. Expanding these pages to address more subtopics doesn't just help traditional rankings—it creates richer content for AI systems to cite. AI assistants prefer complete answers over partial ones.

Low-CTR pages may have structural problems.

When a page ranks well but earns fewer clicks than position benchmarks suggest, something's misaligned. Maybe the title doesn't match search intent. Maybe the meta description doesn't promise a clear answer. These same problems affect AI visibility: if your page doesn't clearly signal what it contains, AI systems may struggle to extract relevant information.

Related keywords indicate schema opportunities.

When you discover related keywords through research tools—variations and long-tail queries around your core terms—each represents a potential question that AI assistants might answer. (Competitor Report: Method 2, "Related Keywords") FAQ schema that addresses these variations creates explicit question-answer pairs that AI systems can cite directly.
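One way to turn related queries into explicit question-answer pairs is sketched below. It uses schema.org's FAQPage, Question, and Answer types; the function name and the sample question and answer are hypothetical.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


# Hypothetical question derived from a related long-tail query.
faq_markup = faq_jsonld([
    ("Which project management tools support Gantt charts?",
     "Placeholder answer text that matches what the page itself says."),
])
print(faq_markup)
```

The answer text in the markup should match the answer visible on the page, since the markup is meant to describe the content rather than replace it.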

Mobile keyword patterns reveal emerging queries.

Mobile searchers often use different phrasing—shorter queries, voice-search patterns, local intent. (Competitor Report: Method 5, "Mobile-Specific Opportunities") These patterns frequently align with how users query AI assistants through voice interfaces. Content that captures mobile keyword patterns may naturally align with conversational AI queries.

The insight here: GSC data helps you prioritize. It shows which pages matter most, which topics you own, and where opportunities exist. That prioritization applies to AI visibility optimization as much as traditional SEO.

Making Your Content AI-Ready

AI visibility isn't mysterious. It comes down to making your content easy for machines to read, extract, and cite.

Implement structured data systematically.

JSON-LD schema markup provides explicit signals that AI systems can process directly. At minimum, ensure:

- Article schema on blog posts and editorial content, specifying author, publication date, and modification date

- Product schema on product pages, including pricing, availability, and reviews

- Organization schema establishing your brand identity and relationships

- FAQ schema on pages that answer common questions

Invalid schema is worse than no schema—errors can cause entire markup blocks to be ignored. Validation matters.
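A first-pass check for broken markup can be sketched with the Python standard library alone. The example below only confirms that each JSON-LD block parses as JSON and declares a type; it is not a substitute for a full schema validator, and the class and function names are illustrative.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> block."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self._buffer: list[str] = []
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def check_page(html: str) -> list[str]:
    """Return a list of problems; an empty list means the basic checks passed."""
    parser = JsonLdExtractor()
    parser.feed(html)
    if not parser.blocks:
        return ["no JSON-LD block found"]
    problems = []
    for i, raw in enumerate(parser.blocks):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"block {i}: invalid JSON ({exc.msg})")
            continue
        # A block may hold one object or a list of objects.
        items = data if isinstance(data, list) else [data]
        for item in items:
            if not isinstance(item, dict) or not ("@type" in item or "@graph" in item):
                problems.append(f"block {i}: missing @type")
    return problems


# A trailing comma is enough to make the whole block unparseable.
broken = '<script type="application/ld+json">{"@type": "Article",}</script>'
print(check_page(broken))
```

A single stray comma causes the entire block to fail parsing, which illustrates why one small error can cost a page all of its markup.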

Search OS handles this through automatic schema generation. Rather than manually coding JSON-LD for every page type, the platform generates valid structured data based on page content. This ensures comprehensive coverage without requiring technical resources for each implementation.

Structure content for extraction.

AI systems parse content to find answers. Help them by:

- Leading sections with direct statements before elaboration (the answer first, then the explanation)

- Using descriptive headings that signal section content

- Formatting lists as actual lists, not comma-separated text

- Including clear definitions for technical terms

- Summarizing key points explicitly rather than implying them

Maintain freshness signals.

Update timestamps matter. AI systems weigh recency, and content with recent modification dates gets preference. When you update content, ensure the modification date reflects the change.

But don't just change dates—make substantive updates. Add new statistics, refresh examples, address recent developments. Meaningful freshness signals genuine currency.
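A simple staleness check on the modification date might look like the sketch below. The 180-day threshold is an arbitrary assumption chosen for illustration, not a figure from any AI system's documentation.

```python
from datetime import date


def days_since_modified(date_modified: str, today: date) -> int:
    """Days between an ISO 8601 dateModified value and today."""
    # Keep only the YYYY-MM-DD part so full timestamps are accepted too.
    return (today - date.fromisoformat(date_modified[:10])).days


def is_stale(date_modified: str, today: date, max_age_days: int = 180) -> bool:
    """Flag pages whose last recorded modification is older than the threshold."""
    return days_since_modified(date_modified, today) > max_age_days


# Example with fixed dates so the result does not depend on when it runs.
print(is_stale("2024-01-01T08:00:00+00:00", today=date(2024, 12, 31)))
```

A check like this only finds candidates for review. The update itself still has to be substantive for the new date to mean anything.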

Ensure technical accessibility.

AI crawlers need to successfully retrieve your pages. Server errors, slow responses, and rendering failures all block access. Pages that load quickly and reliably for traditional search crawlers also perform better for AI system access.

Search OS provides full bot log visibility—the ability to see exactly which crawlers accessed which pages, with what results. This diagnostic layer reveals whether AI crawlers can actually retrieve your content.
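If you have raw server access logs, a rough version of this diagnostic can be sketched by hand. The example assumes logs in the common "combined" format and matches a few AI crawler user-agent tokens (GPTBot, PerplexityBot, ClaudeBot). The token list is illustrative and should be checked against each vendor's current documentation, and the sample log lines are invented. This is not how Search OS implements its bot log view.

```python
import re
from collections import Counter

# Illustrative user-agent tokens; verify against each vendor's documentation.
AI_BOT_TOKENS = ("GPTBot", "PerplexityBot", "ClaudeBot")

# Matches the request, status, and user-agent fields of a combined-format log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def summarize(lines: list[str]) -> Counter:
    """Count AI crawler requests by (bot, outcome), where outcome is 'ok' or 'failed'."""
    counts: Counter = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match is None:
            continue
        bot = next((t for t in AI_BOT_TOKENS if t in match["agent"]), None)
        if bot is None:
            continue
        outcome = "ok" if int(match["status"]) < 400 else "failed"
        counts[(bot, outcome)] += 1
    return counts


# Invented sample lines for illustration only.
sample_log = [
    '203.0.113.5 - - [10/Mar/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.5 - - [10/Mar/2025:10:00:02 +0000] "GET /blog/post HTTP/1.1" 500 0 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.7 - - [10/Mar/2025:10:01:00 +0000] "GET /pricing HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; PerplexityBot/1.0)"',
    '192.0.2.9 - - [10/Mar/2025:10:02:00 +0000] "GET / HTTP/1.1" 200 900 "-" "Mozilla/5.0 (compatible; Googlebot/2.1)"',
]

for (bot, outcome), n in sorted(summarize(sample_log).items()):
    print(bot, outcome, n)
```

Any bot with a high share of failed requests points to pages that AI systems are trying to read but cannot.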

The platform enables 300× faster crawling with zero crawl failures, so bots ingest 300× more information in the same time window. When technical access improves this dramatically, both traditional search crawlers and AI system retrievers benefit.

The Platform Question

Technical SEO complexity varies by platform. Custom-built sites offer full control but require manual implementation. Managed platforms—Shopify, Wix, Substack, and similar—handle basics automatically but constrain advanced optimization.

For AI visibility specifically:

Shopify provides solid product schema out of the box but limited customization for other schema types. Article schema, FAQ schema, and organizational markup typically require apps or custom code.

Wix has improved substantially but still limits access to raw HTML and server configurations. Implementing comprehensive structured data may require workarounds.

Substack prioritizes the reading experience over machine readability. Newsletter archives may lack structured data that helps AI systems understand content type, authorship, and dates.

WordPress varies wildly based on hosting, themes, and plugins. Some configurations support excellent structured data; others create fragmented implementations that don't validate.

Search OS addresses this through cross-platform applicability. Whether your site runs on Shopify, Wix, Substack, or custom infrastructure, the platform layers AI-ready optimization on top of existing architecture. Bot-first page structures ensure content is machine-readable regardless of underlying platform constraints.

This matters because most businesses can't switch platforms just to improve AI visibility. They need solutions that work with their current stack.

Continuous Visibility in Both Channels

Traditional SEO taught us that optimization is ongoing. Rankings shift, competitors improve, algorithms update. Monthly monitoring and regular content refreshes became standard practice.

AI visibility requires the same continuous attention—but with different signals to watch.

For traditional search, you monitor GSC metrics: clicks, impressions, CTR, average position. You track ranking changes weekly and investigate declines promptly. The competitor report recommends using the "Compare" feature in GSC to see before-and-after impact of changes. (Competitor Report: "Track Results in GSC")

For AI visibility, the signals are less centralized but equally important. Are AI crawlers accessing your pages successfully? Is your schema markup still valid after recent content updates? Are your pages being cited in AI-generated answers?

Search OS maintains a continuous optimization loop for both channels. The platform analyzes performance data, detects issues, implements fixes, and verifies results, not as a one-time audit but as an ongoing operation.

When schema errors appear, they get flagged and corrected. When crawl patterns shift, the system adapts. When target pages aren't surfacing in either traditional search or AI results, the optimization loop continues until they reliably appear.

This automation is significant. Manual monitoring of technical SEO factors (crawl logs, schema validation, structured data coverage) consumes substantial time. Automating these processes reduces labor costs by approximately 80% while maintaining comprehensive coverage.

Measuring What Matters

For traditional search, GSC provides your measurement foundation. Clicks show actual traffic. Impressions indicate visibility. CTR reveals engagement. Position tracks ranking changes.

Layer additional tracking on top:

- Position tracking tools for daily ranking updates (GSC data can be delayed)

- Competitor monitoring to understand relative performance

- Historical data beyond GSC's 16-month window

For AI visibility, measurement is still evolving. Key signals to track:

AI citation monitoring. Some tools now track whether your pages appear in AI-generated answers. This is the AI equivalent of tracking rankings—direct measurement of visibility.

Schema validation coverage. What percentage of your pages include valid structured data? Are errors appearing that invalidate markup blocks? Regular validation catches problems before they affect visibility.
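The coverage percentage itself is simple arithmetic once each page has an audit result. The sketch below assumes a mapping from URL to a list of schema problems, where an empty list means the page's markup passed; both the mapping shape and the sample URLs are hypothetical.

```python
def schema_coverage(pages: dict[str, list[str]]) -> float:
    """Percentage of audited pages with no recorded schema problems."""
    if not pages:
        return 0.0
    valid = sum(1 for problems in pages.values() if not problems)
    return 100.0 * valid / len(pages)


# Hypothetical audit results: URL -> list of problems found.
audit = {
    "/pricing": [],
    "/blog/post-a": ["block 0: invalid JSON"],
    "/blog/post-b": [],
    "/about": [],
}
print(f"{schema_coverage(audit):.0f}% of pages have valid structured data")
```

Tracking this number over time shows whether content updates are quietly breaking markup.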

Freshness metrics. How recently were your key pages updated? Are modification dates accurate? Do content freshness signals align with AI systems' recency preferences?

Technical access success. Are crawlers—both traditional and AI—successfully retrieving your pages? Access failures create visibility failures.

The complete picture requires metrics from both channels. GSC tells you about traditional search. Crawl logs, schema audits, and citation tracking tell you about AI visibility. Together, they reveal your full search presence.

The New Optimization Workflow

Putting this together into an operational process:

Step 1: Identify priority pages from GSC data.

Start with keyword performance. Which pages drive the most traffic? Which keywords are your highest value? Which terms sit in striking distance? Which pages have CTR below position benchmarks?

This prioritization applies to both channels. Your most important pages for traditional search are also your most important pages for AI visibility.

Step 2: Audit structure and schema.

For each priority page, assess machine readability. Is structured data present and valid? Is content organized for extraction? Are headings descriptive? Do sections lead with clear answers?

Gaps here affect AI visibility even when traditional rankings are strong.

Step 3: Implement machine-readable optimization.

Add or fix schema markup. Restructure content for clarity. Update timestamps to reflect genuine freshness. Ensure technical accessibility for all crawlers.

Search OS automates much of this through automatic JSON-LD generation, bot-first page structures, and continuous validation.

Step 4: Monitor both channels.

Track traditional metrics in GSC. Track AI visibility through citation monitoring, schema audits, and crawl analysis. Use keyword and prompt prediction to anticipate emerging queries.

Step 5: Iterate continuously.

Optimization isn't a one-time project. Rankings change. AI systems evolve. New competitors emerge. A continuous loop—detecting issues, implementing fixes, verifying results—maintains visibility across both channels.

Two Channels, One Goal

Google Search Console shows you the traditional search world clearly. Keywords, rankings, clicks, impressions. This data remains crucial for understanding and improving organic performance.

But GSC can't show you the AI search world. ChatGPT, Perplexity, Gemini, Copilot—these systems operate on different signals. They favor structured content, explicit answers, fresh information, and machine-readable formats.

A site optimized only for traditional search risks invisibility in AI search. As AI assistants grow in usage, that invisibility becomes increasingly costly.

The solution isn't to abandon keyword optimization. It's to layer AI visibility optimization on top. Use GSC data to identify priority pages. Then ensure those pages are structured, schema-marked, fresh, and accessible for both traditional crawlers and AI systems.

Search OS enables this dual-channel approach. Bot-first pages that work across Shopify, Wix, Substack, and beyond. Automatic schema generation that maintains valid structured data. Full visibility into which systems can access your content. Continuous optimization that adapts as both traditional and AI search evolve.

Your keywords rank because your pages rank. Your AI visibility depends on whether AI systems can extract, understand, and cite your content.

Optimize for both. That's where search is going.