Why AI Search Ignores Most Web Content and How to Fix It

New research shows most pages never get cited by AI search—even from high-authority sites. Learn what determines AI visibility and how to close the gap.

Here's an uncomfortable truth: even if you publish quality content on a high-authority domain, AI search platforms will probably ignore it.

Recent experiments tracking AI citations over 30 days found that a large share of pages, all published under ideal conditions, never became sources for AI-generated answers. Not once.

This isn't about content quality. It's about whether AI systems can efficiently access, parse, and trust your information.

The question isn't just "Will my content rank?" anymore. It's "Can AI agents actually read my pages?"

The Citation Gap Problem

A study tracking 81 test pages published on a domain with an Authority Score of 84 revealed something troubling: despite strong overall SEO performance and topical relevance, a significant portion of pages never surfaced as cited sources in AI search results.

The pages were FAQ-style content covering AI optimization and SEO topics—exactly the type of content AI platforms should find useful. They were tracked across both Google's AI Mode and ChatGPT search over a 30-day period.

The results:

- Google AI Mode peaked at 59% citation coverage, meaning only 59% of pages were ever cited there

- ChatGPT search peaked at 42% citation coverage

- Many pages received zero citations throughout the entire observation period

This means roughly 4 in 10 pages on a high-authority site, covering relevant topics and backed by strong SEO fundamentals, were invisible to Google AI Mode. ChatGPT search's coverage topped out lower still.

Why?

The answer lies in how AI platforms process and select information sources. And it reveals a gap that traditional SEO metrics don't capture.

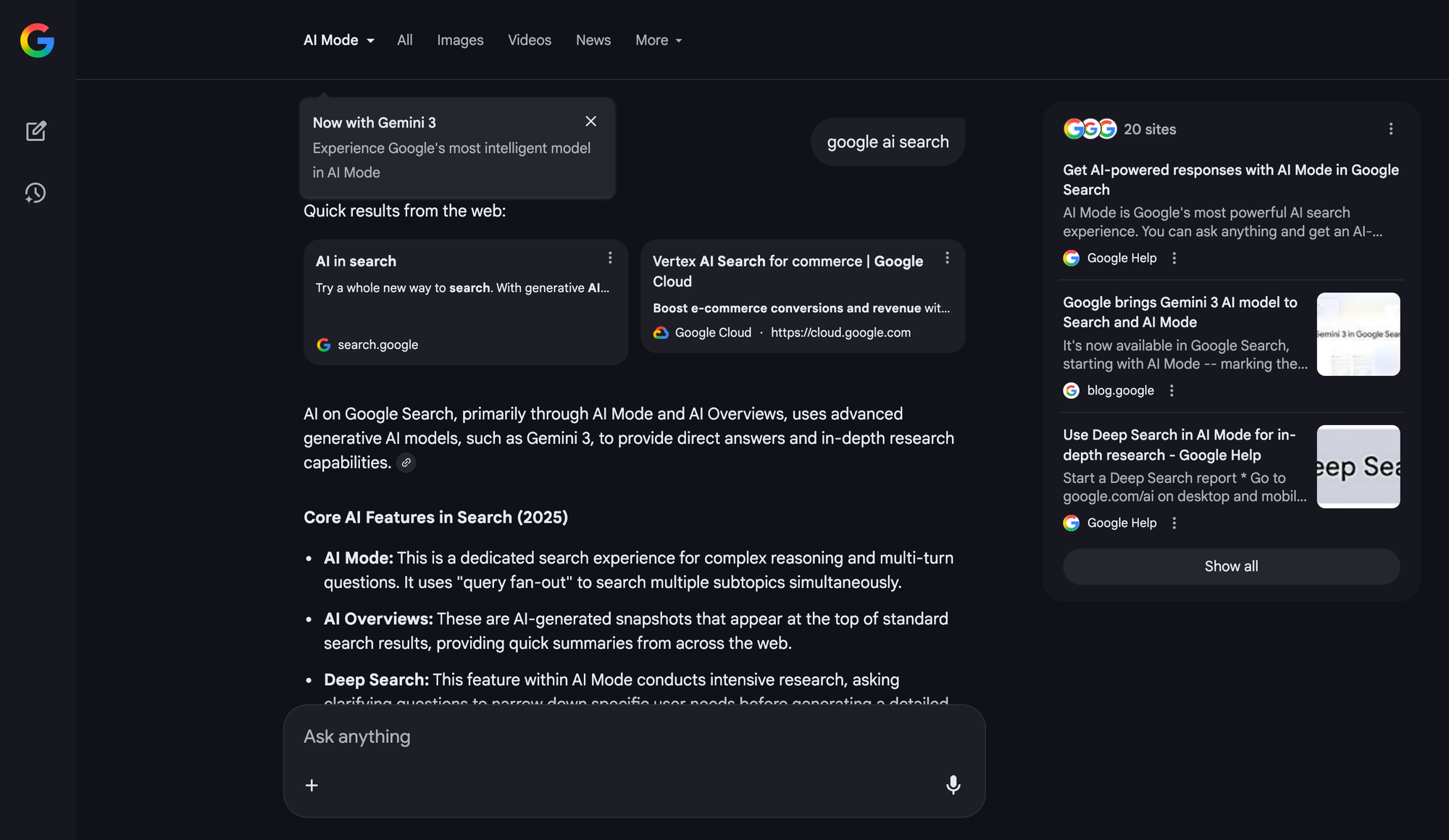

Two Platforms, Two Completely Different Behaviors

The study uncovered something else worth examining: Google AI Mode and ChatGPT search behave in fundamentally different ways when selecting sources.

Google AI Mode: Fast but Volatile

Google's AI Mode picks up content quickly. Within 24 hours of publication, 36% of the test pages were already being cited. By day six, that number climbed to 59%.

But here's the catch: those citations didn't last.

Google AI Mode continuously re-evaluates which sources to cite. Pages that appeared as sources one day disappeared the next. By day 30, only 26% of pages were still being cited—a drop of more than 50% from peak visibility.

The pattern suggests Google's AI Mode treats source selection as an ongoing experiment. It samples quickly, then refines ruthlessly.

ChatGPT Search: Slow but Stable

ChatGPT search showed the opposite behavior.

On day one, only 10% of pages were cited, less than a third of Google AI Mode's 36% day-one coverage. Growth was gradual: 17% by day seven, 35% by day 14, and 42% by day 30.

But once ChatGPT cited a page, that citation tended to stick. The platform added new sources over time without dropping existing ones at the same rate.

This creates a different optimization challenge. With ChatGPT, the question isn't "How do I stay visible?" but "How do I get discovered in the first place?"
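
To make the comparison concrete, here is a small sketch that reruns the arithmetic on the percentages quoted above. The variable and function names are illustrative, and the page counts are approximations derived from the reported percentages, not figures taken from the study itself.

```python
# Coverage figures as reported in the study summary above (percent of 81 pages).
TOTAL_PAGES = 81
GOOGLE_AI_MODE = {1: 36, 6: 59, 30: 26}          # day -> % of pages cited
CHATGPT_SEARCH = {1: 10, 7: 17, 14: 35, 30: 42}  # day -> % of pages cited


def relative_drop(peak: float, current: float) -> float:
    """Decline from peak, expressed as a percentage of the peak."""
    return (peak - current) / peak * 100


def approx_pages(percent: float, total: int = TOTAL_PAGES) -> int:
    """Approximate page count implied by a coverage percentage."""
    return round(total * percent / 100)


google_peak = max(GOOGLE_AI_MODE.values())
google_drop = relative_drop(google_peak, GOOGLE_AI_MODE[30])
uncited_at_peak = 100 - google_peak
day_one_ratio = GOOGLE_AI_MODE[1] / CHATGPT_SEARCH[1]

print(f"Google AI Mode drop from peak: {google_drop:.1f}%")
print(f"Not cited at Google's peak: {uncited_at_peak}% (~{approx_pages(uncited_at_peak)} pages)")
print(f"Day-one coverage, Google vs ChatGPT: {day_one_ratio:.1f}x")
```

Run as written, this works out to a drop of about 56% from Google's peak, roughly 33 of the 81 pages uncited at that peak, and a 3.6x gap in day-one coverage.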

What This Reveals About Machine Readability

These divergent patterns point to something deeper than algorithmic preference. They suggest that the structural accessibility of your content—how easily AI systems can extract, validate, and trust your information—plays a decisive role in citation outcomes.

Consider what makes a page easy for AI to process:

- Clear semantic structure: Headings, lists, and logical information hierarchy that machines can parse without guessing

- Explicit entity relationships: Named concepts connected through structured data, not buried in prose

- Consistent formatting: Predictable patterns that reduce parsing errors

- Machine-readable metadata: Schema markup that tells AI systems what your content is about before they read it

Pages lacking these elements force AI systems to work harder. And when AI platforms evaluate thousands of potential sources, the ones that require extra processing often get skipped.
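
One rough way to check a page against the list above is a small structural audit. The sketch below uses only Python's standard library; the `StructureAudit` class, the `audit` function, and the sample HTML are hypothetical, and the checks are a simplification of whatever real AI platforms actually evaluate.

```python
import json
from html.parser import HTMLParser


class StructureAudit(HTMLParser):
    """Collect a few structural signals from raw HTML."""

    def __init__(self):
        super().__init__()
        self.headings = []       # heading levels in document order
        self.list_items = 0
        self.jsonld_blocks = 0   # JSON-LD blocks that parse as valid JSON
        self._in_jsonld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h1", "h2", "h3", "h4", "h5", "h6"):
            self.headings.append(int(tag[1]))
        elif tag == "li":
            self.list_items += 1
        elif tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self._in_jsonld = False
            try:
                json.loads("".join(self._buffer))
                self.jsonld_blocks += 1
            except json.JSONDecodeError:
                pass  # malformed markup is not counted


def audit(html: str) -> dict:
    parser = StructureAudit()
    parser.feed(html)
    parser.close()
    levels = parser.headings
    # A jump such as h2 -> h4 suggests a broken information hierarchy.
    skipped = any(b - a > 1 for a, b in zip(levels, levels[1:]))
    return {
        "h1_count": levels.count(1),
        "skipped_heading_level": skipped,
        "list_items": parser.list_items,
        "valid_jsonld_blocks": parser.jsonld_blocks,
    }


SAMPLE = """<html><head>
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "FAQPage"}</script>
</head><body>
<h1>AI visibility FAQ</h1>
<h2>What is AI Mode?</h2>
<ul><li>Point one</li><li>Point two</li></ul>
<h4>Footnote</h4>
</body></html>"""

report = audit(SAMPLE)
print(report)
```

The sample page passes the metadata check but gets flagged for jumping from an h2 straight to an h4, the kind of inconsistency that is easy to miss by eye.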

This would explain why domain authority alone doesn't guarantee AI visibility. A page can rank well in traditional search while remaining structurally opaque to AI agents.

The Speed Factor: Why First Impressions Matter

The study's timeline data reveals another insight: initial visibility creates momentum.

Pages that were cited early by Google AI Mode had a higher chance of remaining in the citation pool, even as overall numbers declined. Conversely, pages that weren't cited in the first week rarely appeared later.

For ChatGPT search, the relationship was different but equally important. Pages that gained citations in the first two weeks were more likely to accumulate additional citations over time.

This suggests that AI platforms form early judgments about source reliability. Once a page is classified as useful (or not), that classification tends to persist.

The implication: you can't afford to wait and see. Your content needs to be AI-ready from the moment it goes live.

Why Traditional SEO Doesn't Solve This

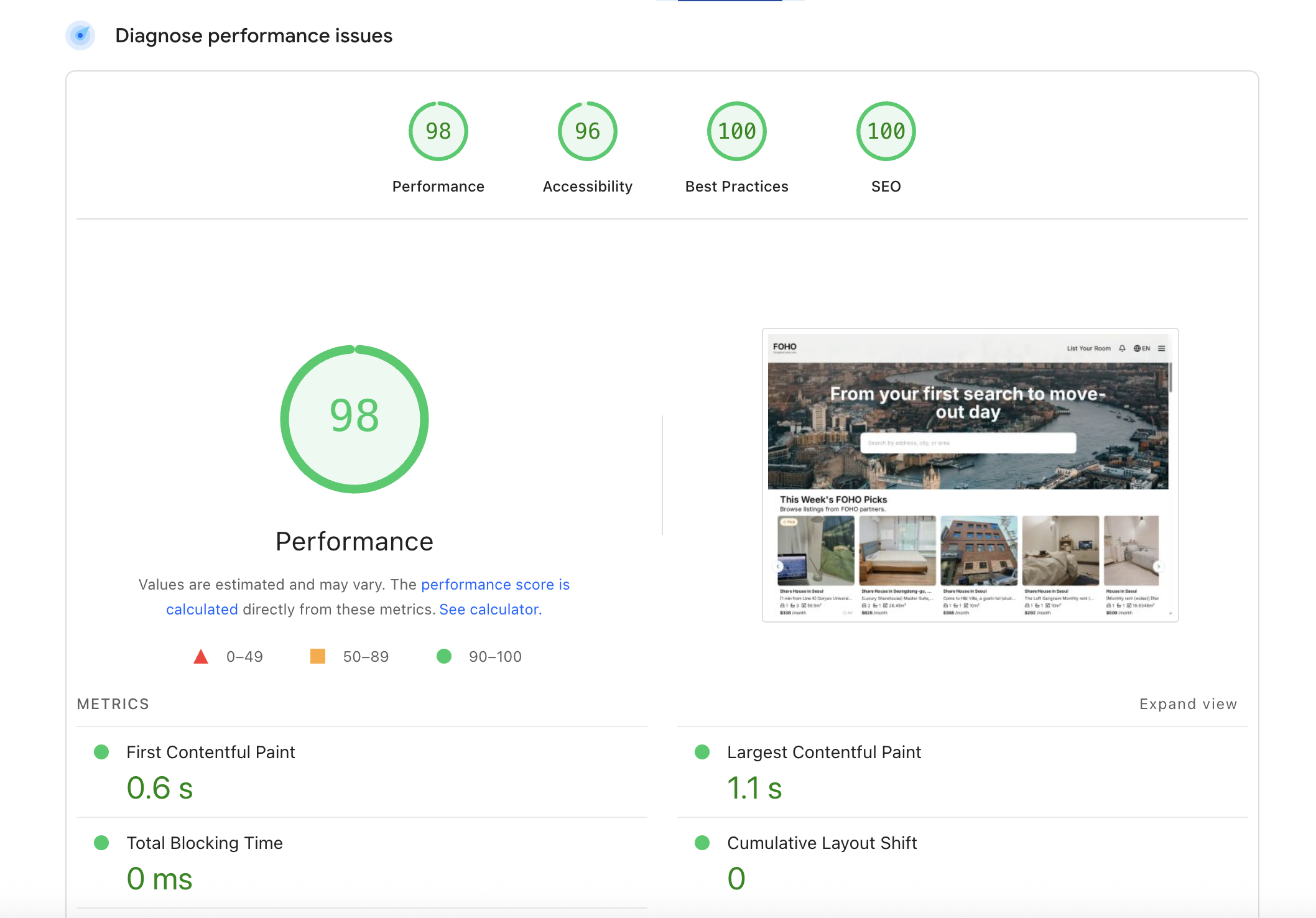

Traditional SEO focuses on signals that help search engines understand relevance and authority: backlinks, keyword optimization, page speed, mobile responsiveness.

These factors still matter. But they don't directly address the machine readability problem.

A page can do all of the following and still be structurally difficult for AI agents to process:

- Rank on page one of Google

- Have excellent Core Web Vitals

- Earn dozens of quality backlinks

The gap exists because traditional search engines and AI platforms parse content differently.

Search engines index pages and match them to queries based on relevance signals. AI platforms need to extract specific information from pages, synthesize it with other sources, and generate coherent answers.

The second task is harder. It requires not just finding relevant pages, but understanding what those pages actually say—in a format that can be programmatically processed.

The Structural Solution

Closing the AI visibility gap requires addressing the root cause: making your content machine-readable at a structural level.

This means:

1. Bot-First Page Architecture

Design pages so that the most important information is immediately accessible to crawlers and AI agents. This isn't about hiding content from humans—it's about ensuring machines don't miss what matters.

Bot-first pages present core information in predictable locations with consistent formatting. They minimize the parsing work AI systems need to do.
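
A quick sanity check for this idea is to ask whether a key fact appears in the served HTML at all, and how early. The sketch below is an illustration only: it assumes a crawler that does not execute JavaScript, and the `VisibleText` class, the `key_fact_position` helper, and both sample pages are hypothetical.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text available without running scripts."""

    SKIP = {"script", "style", "template"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def key_fact_position(html: str, key_fact: str):
    """Return how far into the visible text the fact appears (0.0 = start),
    or None if it is missing from the served HTML."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.parts)
    index = text.lower().find(key_fact.lower())
    return None if index < 0 else index / max(len(text), 1)


STATIC_PAGE = (
    "<html><body><h1>Return policy</h1>"
    "<p>Returns are accepted within 30 days.</p>"
    "<p>More details follow here.</p></body></html>"
)
JS_ONLY_PAGE = (
    '<html><body><div id="app"></div>'
    '<script>render("Returns are accepted within 30 days.")</script>'
    "</body></html>"
)

static_pos = key_fact_position(STATIC_PAGE, "within 30 days")
js_only_pos = key_fact_position(JS_ONLY_PAGE, "within 30 days")
print(static_pos, js_only_pos)
```

The second page carries the same fact, but only inside a script, so a non-rendering crawler would find nothing to extract.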

2. Automatic Schema Generation

Structured data (JSON-LD) provides explicit semantic context that AI systems can process without ambiguity.

Instead of inferring that a page is a "product review" from contextual clues, schema markup declares it directly. This reduces processing errors and increases citation confidence.

The challenge: manually maintaining schema across a large site is unsustainable. Changes to content require corresponding schema updates. Inconsistencies creep in. Coverage gaps appear.

Automatic schema generation solves this by programmatically creating and updating structured data as content changes.
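
As an illustration of what programmatic generation can look like, the sketch below builds schema.org FAQPage markup from question-and-answer pairs. It is a minimal example and not how any particular product works; `faq_jsonld`, `to_script_tag`, and the sample content are hypothetical names and data.

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def to_script_tag(data) -> str:
    """Serialize to a JSON-LD script element ready to embed in a page."""
    payload = json.dumps(data, ensure_ascii=False, indent=2)
    # Escape "</" so answer text cannot close the script element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


faqs = [
    ("What is AI Mode?", "A Google search experience that cites web sources."),
    ("How long did the study run?", "Citations were tracked for 30 days."),
]
markup = to_script_tag(faq_jsonld(faqs))
print(markup)
```

Because the markup is derived from the same data that renders the page, regenerating it on every content change keeps the two from drifting apart.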

3. Full Bot Log Visibility

You can't optimize what you can't see.

Most site owners have no idea how AI agents actually interact with their pages. They see aggregate traffic numbers but not individual bot behaviors, crawl patterns, or processing failures.

Full bot log visibility means inspecting every interaction: which pages bots request, how they parse content, where they encounter errors, and what information they extract.

This transforms AI optimization from guesswork into evidence-based iteration.
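
Even without a dedicated tool, ordinary server access logs give a first look at bot behavior. The sketch below assumes logs in the common "combined" format and matches a handful of publicly documented crawler user-agent tokens; the token list, the `summarize` function, and the sample lines are illustrative. Access logs only show requests and response codes, not how a bot parsed the page, and user-agent strings can be spoofed.

```python
import re
from collections import Counter, defaultdict

# User-agent substrings for some publicly documented crawlers; extend as needed.
AI_BOT_TOKENS = ("GPTBot", "OAI-SearchBot", "ChatGPT-User", "PerplexityBot", "ClaudeBot", "Googlebot")

# Combined log format: ip, identity, user, [time], "request", status, bytes, "referer", "agent"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def summarize(lines):
    """Count requests per bot and collect paths that returned errors."""
    hits = Counter()
    errors = defaultdict(list)
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines in an unexpected format
        bot = next((t for t in AI_BOT_TOKENS if t in match["agent"]), None)
        if bot is None:
            continue  # not one of the tracked crawlers
        hits[bot] += 1
        status = int(match["status"])
        if status >= 400:
            errors[bot].append((match["path"], status))
    return hits, dict(errors)


SAMPLE_LOG = [
    '203.0.113.5 - - [10/Oct/2025:13:55:36 +0000] "GET /faq/ai-mode HTTP/1.1" 200 5123 "-" "Mozilla/5.0 (compatible; GPTBot/1.0; +https://openai.com/gptbot)"',
    '203.0.113.9 - - [10/Oct/2025:13:56:01 +0000] "GET /faq/missing HTTP/1.1" 404 312 "-" "Mozilla/5.0 (compatible; OAI-SearchBot/1.0; +https://openai.com/searchbot)"',
    '198.51.100.7 - - [10/Oct/2025:13:57:12 +0000] "GET / HTTP/1.1" 200 9000 "-" "Mozilla/5.0 (Windows NT 10.0) Chrome/120.0"',
]

hits, errors = summarize(SAMPLE_LOG)
print(hits)
print(errors)
```

On the sample lines, two crawler requests are counted, the human browser visit is ignored, and the 404 is attributed to the bot that hit it.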

4. Continuous Optimization Loops

AI citation patterns aren't static. The study showed Google AI Mode changing its source selection daily. ChatGPT search gradually expanded its citation pool over weeks.

Effective AI visibility requires continuous monitoring and adjustment—not one-time optimization.

This means:

- Tracking citation changes across platforms

- Identifying which structural changes correlate with visibility gains

- Iterating until target pages reliably surface
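
The tracking step in that loop can be as simple as comparing daily snapshots of which URLs were cited. The sketch below assumes you already have those snapshots from some monitoring process; how they are collected is outside its scope, and the function names and sample paths are hypothetical.

```python
def citation_changes(previous: set[str], current: set[str]) -> dict:
    """Compare two snapshots of cited URLs for one platform."""
    return {
        "gained": sorted(current - previous),
        "lost": sorted(previous - current),
        "retained": sorted(current & previous),
    }


def coverage(cited: set[str], tracked: set[str]) -> float:
    """Percentage of tracked pages that are currently cited."""
    if not tracked:
        return 0.0
    return len(cited & tracked) / len(tracked) * 100


tracked_pages = {"/faq/a", "/faq/b", "/faq/c", "/faq/d"}
day_1 = {"/faq/a", "/faq/b"}
day_2 = {"/faq/b", "/faq/c"}

changes = citation_changes(day_1, day_2)
day_2_coverage = coverage(day_2, tracked_pages)
print(changes, day_2_coverage)
```

Keeping gained, lost, and retained pages separate matters because a flat coverage number can hide churn: here coverage stays at 50% while one page drops out and another takes its place.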

What Search OS Delivers

Search OS addresses these structural challenges systematically.

The platform creates bot-first pages that AI agents can read efficiently. It generates JSON-LD schema automatically across content types and platforms—including Shopify, Wix, and Substack.

Full bot log visibility lets you inspect every crawler interaction. You can see exactly how AI agents process your pages and identify specific barriers to citation.

The system uses bot-log-driven analysis to diagnose structural problems and prioritize fixes. It predicts which keywords and prompts are most likely to trigger AI citations, so you can optimize proactively.

And it runs continuous optimization loops until target pages reliably surface—without promising guaranteed rankings, because AI citation isn't a ranking game.

The measured results:

- 300× faster crawling

- 0 crawl failures

- 300× more information ingested by bots in the same time window

- 80% labor cost reduction

These aren't vanity metrics. They represent the structural improvements that determine whether AI platforms can access your content at all.

The Platform Dimension

The study tracked citations on a single high-authority domain. But most businesses operate across multiple platforms—each with different structural constraints.

Shopify stores have different page architectures than WordPress blogs. Wix sites handle structured data differently than custom-built applications. Substack newsletters present unique challenges for AI discoverability.

This platform fragmentation compounds the machine readability problem. A solution that works on one platform may fail on another.

Search OS provides cross-platform applicability, ensuring consistent bot-first architecture and schema generation regardless of where your content lives.

Rethinking the Visibility Timeline

The 30-day citation study offers a useful framework for expectations.

For Google AI Mode:

- Initial visibility can happen within 24-48 hours

- Peak citation rates typically occur within the first week

- Maintaining visibility requires ongoing structural optimization

- Expect volatility—citation status can change daily

For ChatGPT search:

- Initial citations may take 1-2 weeks to appear

- Growth is gradual but more stable

- Once cited, pages tend to retain visibility

- The primary challenge is initial discovery, not maintenance

These timelines assume your content is already structurally accessible. Pages with machine readability problems may never enter the citation pool at all.

What to Do Now

If your content isn't appearing in AI search results, the problem probably isn't quality or authority. It's structure.

Start by asking:

- Can AI agents actually parse my pages? Not "do they load fast" or "are they mobile-friendly"—but can machines extract specific information from them reliably?

- Is my structured data complete and consistent? Partial schema coverage or outdated markup creates confusion. AI systems may ignore pages they can't confidently classify.

- Do I know how bots interact with my site? If you can't see bot behavior, you're optimizing blind.

- Am I iterating based on evidence? One-time optimization doesn't work for AI visibility. You need continuous feedback loops.

The pages that surface in AI search aren't necessarily the best-written or most authoritative. They're the ones AI systems can process efficiently and cite confidently.

That's a structural problem. And structural problems require structural solutions.

Key Takeaways

- In the study, a large share of pages were never cited by AI search platforms, even on a high-authority domain

- Google AI Mode cites quickly but drops sources frequently; ChatGPT search is slower but more stable

- Traditional SEO metrics (rankings, backlinks, page speed) don't reliably predict AI visibility

- Machine readability—how easily AI can parse and extract information—determines citation outcomes

- Bot-first architecture, automatic schema, full log visibility, and continuous optimization loops close the AI visibility gap

- Search OS delivers these capabilities with measured results: 300× faster crawling, 0 crawl failures, 300× more information ingestion, and 80% labor cost reduction