AI Search Visibility in 2026: The Technical Gap Most Marketers Miss

AI search cites content—not pages. Learn why structured, bot-readable formats matter more than rankings, and how to make AI agents actually understand your site.

Most marketers already know that AI search is changing the game. Fewer know why their content still isn't showing up.

The conversation around AI visibility tends to focus on content quality, freshness, and authority. These factors matter. But they assume something more fundamental: that AI systems can actually access and interpret your pages in the first place.

Here's the uncomfortable reality. AI-driven search engines don't rank pages the way Google traditionally has. They synthesize answers from multiple sources, extracting relevant fragments and stitching them into a single response. If your content isn't structured for extraction, it doesn't matter how well written it is. AI systems will skip over it entirely or, worse, cite a competitor who formatted the same information more clearly.

This article breaks down what's actually happening in AI search, where the technical gaps emerge, and what operational changes make content citable in generative environments.

AI Search Doesn't Rank Pages—It Cites Fragments

Traditional search engines index pages and rank them based on authority, relevance, and hundreds of other signals. Users scroll through a list of links and choose where to click.

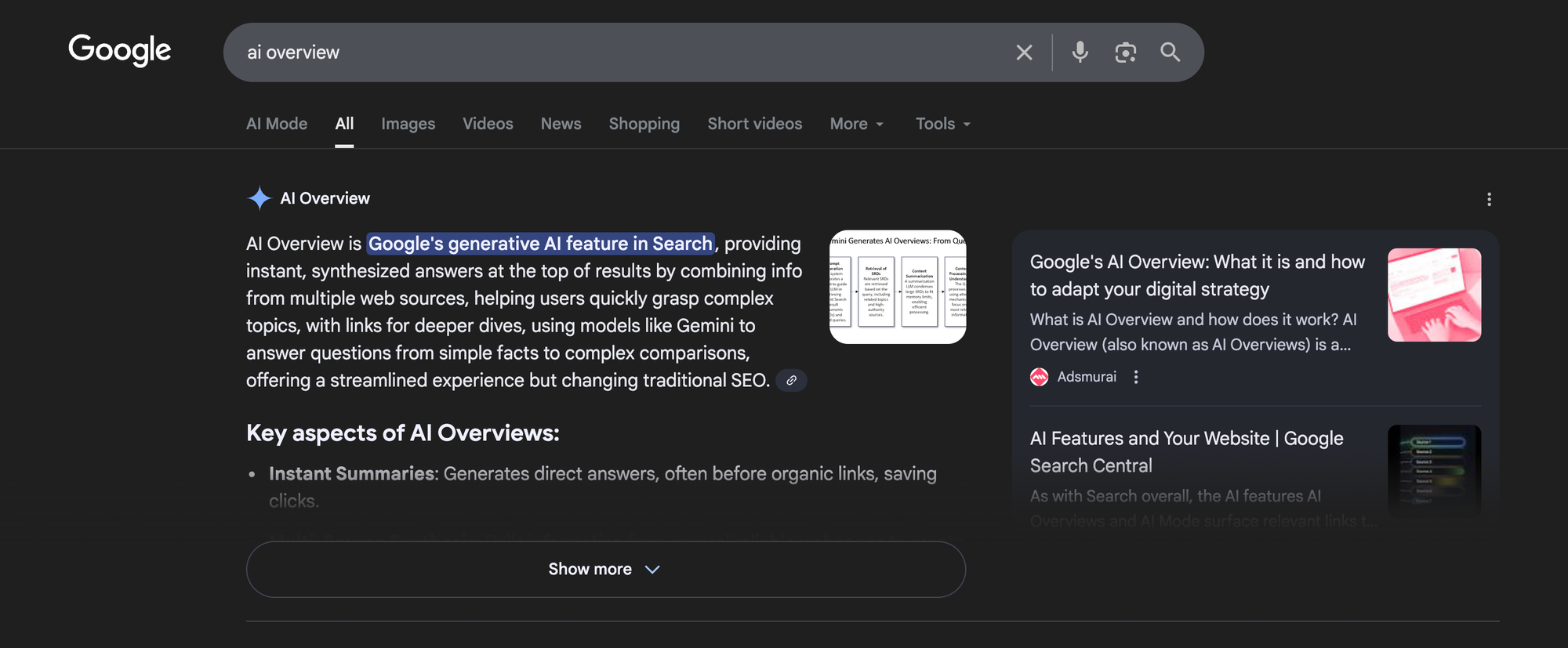

AI-powered search works differently. Systems like Google's AI Overviews, Perplexity, and ChatGPT function more like editors than librarians. They interpret a query, retrieve information from multiple sources, and compose a single synthesized answer.

The shift changes what success looks like.

In traditional search, the goal was ranking. Position one meant maximum visibility. In AI search, the goal is citation. Your content needs to be referenced, quoted, or mentioned within the generated response. A page ranking fifth organically might still appear as the primary cited source in an AI Overview if its content is more extractable than the top result.

This distinction matters because it reframes the entire optimization challenge. Ranking factors like backlinks and domain authority still influence whether AI systems trust your content. But a new variable has entered the equation: can the AI actually parse what you've written?

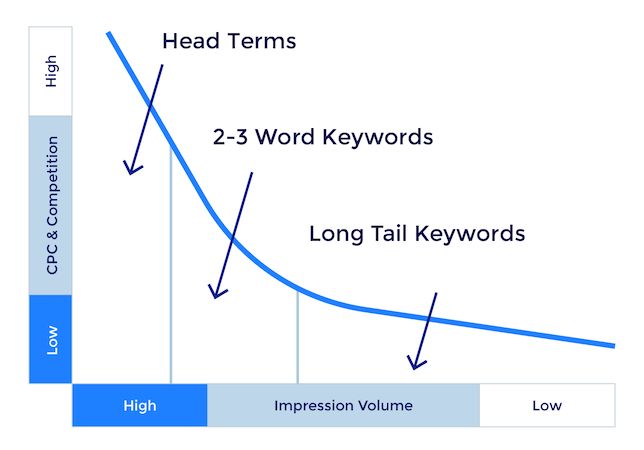

Why Queries Are Getting Longer (And What That Signals)

User behavior is evolving alongside AI capabilities. When people know they'll get a direct answer instead of a list of links, they ask more complex questions.

Instead of fragmented searches like "CRM software pricing," users now enter full queries: "What's the best CRM for a 50-person marketing agency with Salesforce integration that costs under $150 per user monthly?"

This shift has two implications for content strategy.

First, keyword targeting gives way to intent coverage. Optimizing for a single phrase matters less than structuring content to address specific, scenario-based questions. AI systems pull from pages that answer the actual question—not pages that merely contain the right keywords.

Second, longer queries demand more precise content organization. AI models scan for sections that directly address the user's stated need. Pages with clear subheadings, self-contained paragraphs, and explicit answers to implied questions become more valuable.

The operational takeaway is straightforward: structure content so that any individual section could function as a standalone answer. If a user asks a question that matches your H2, the AI should be able to extract your first paragraph under that heading and present it as a complete thought.
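One way to test the standalone-answer idea is to pull the first paragraph under each H2 and read it in isolation. The sketch below does that with Python's standard library. The function name `section_leads` and the sample HTML are illustrative assumptions, and real AI systems almost certainly use more sophisticated extraction than this.

```python
from html.parser import HTMLParser


class SectionLeadExtractor(HTMLParser):
    """Collect the first <p> that follows each <h2> in an HTML document."""

    def __init__(self):
        super().__init__()
        self.leads = {}        # heading text -> first paragraph text
        self._mode = None      # "h2" or "p" while capturing text, else None
        self._buffer = []
        self._heading = None   # most recent <h2> text

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._mode, self._buffer = "h2", []
        elif tag == "p" and self._heading and self._heading not in self.leads:
            self._mode, self._buffer = "p", []

    def handle_data(self, data):
        if self._mode:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag != self._mode:
            return
        # Collapse whitespace so inline tags and line breaks do not matter
        text = " ".join("".join(self._buffer).split())
        if tag == "h2":
            self._heading = text
        else:
            self.leads[self._heading] = text
        self._mode = None


def section_leads(html):
    """Return {h2 heading: first paragraph under it} for the given HTML."""
    parser = SectionLeadExtractor()
    parser.feed(html)
    parser.close()
    return parser.leads


# Hypothetical page fragment
sample = (
    "<h2>What does a CRM cost?</h2>"
    "<p>Most plans cost <b>$50</b> per user.</p>"
    "<p>More detail follows.</p>"
)
for heading, lead in section_leads(sample).items():
    print(f"{heading} -> {lead}")
```

If a lead paragraph printed this way doesn't make sense without the text around it, that section is a candidate for rewriting.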

The Visual and Structural Expansion of Search

AI search isn't limited to text anymore. Google Lens alone processes over 12 billion visual searches each month.

Users can now upload images alongside text prompts and receive AI-generated explanations. A photo of a plant combined with "Is this safe for dogs?" returns a synthesized answer drawn from veterinary sources, botanical databases, and community forums.

This multimodal capability means accessibility features are no longer optional. Descriptive alt text, video transcripts, and image captions serve a dual purpose: they help users with disabilities, and they help AI systems interpret non-text content.

Beyond inputs, AI search is also changing how information is displayed. Responses increasingly organize multi-source insights into tables, comparison lists, and step-by-step breakdowns. The format mimics a tutorial more than a search result.

What this means for content creators: AI systems can't synthesize what they can't segment. If your content is a wall of undifferentiated prose, it won't get extracted into the structured formats AI Overviews prefer. Clear organization isn't just a user experience improvement—it's a technical requirement for visibility.

Generational Adoption Is Accelerating the Shift

Not everyone uses AI search equally. Adoption patterns show a clear generational divide.

Among U.S. adults under 30, 58% have used ChatGPT. That's nearly double the rate of adults over 30. Meanwhile, roughly 31% of Gen Z users report starting searches directly on AI platforms or chatbots—compared to about 20% of the general population.

These numbers signal where search behavior is heading. Younger users already treat AI assistants as default research tools, not novelties. As this cohort ages into higher purchasing power, brands that aren't visible in AI environments will lose access to an entire demographic.

The practical implication: don't treat AI search as a secondary channel. For brands targeting younger audiences, it's increasingly the primary one.

Click-Through Rates Are Falling—But Visibility Still Matters

Here's a number that should concern anyone relying on organic traffic: when AI summaries appear, click-through rates drop significantly.

Research shows CTR fell by 15.5% across queries that trigger AI Overviews. Separate data indicates clicks are nearly twice as high when no AI summary appears—15% compared to 8%. And only 1% of users click links displayed inside AI summaries themselves.
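Percent figures like these can be read either as relative changes or as percentage-point differences, and the two can look very different. As a small worked example using only the 15% and 8% figures quoted above (the function names are illustrative):

```python
def point_change(before, after):
    """Change in percentage points (inputs are percentages)."""
    return after - before


def relative_change(before, after):
    """Change expressed as a fraction of the starting value."""
    return (after - before) / before


# Figures quoted above: 15% of searches end in a click without an AI summary, 8% with one
without_summary, with_summary = 15.0, 8.0

print(f"{point_change(without_summary, with_summary):+.1f} percentage points")
print(f"{relative_change(without_summary, with_summary):+.1%} relative change")
```

A 7-point drop and a roughly 47% relative drop describe the same data, so it helps to note which one a report is using before comparing numbers across studies.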

This creates an uncomfortable paradox. AI Overviews reduce traffic to source websites, but being cited in those Overviews is still better than not appearing at all. The brand mentioned in an AI-generated answer gains exposure and trust even if users don't click through.

The measurement framework needs to evolve. Traffic and conversions still matter, but they're no longer sufficient proxies for visibility. Citation frequency, brand mentions in AI responses, and on-SERP presence are becoming equally important metrics.

AI Overviews Are Expanding Fast

The scope of AI-generated answers is growing. AI Overviews appeared for 6.49% of searches in January 2025. By March 2025, that share had roughly doubled to 13.1%. Certain query types trigger Overviews more frequently:

- Complex or multi-part questions ("how to combine," "compare," "best way to")

- Instructional and product comparison searches

- Current events and information-dense topics

When Overviews appear, they typically cite between three and eight sources. Those sources share common characteristics: clear writing that answers questions directly, self-contained sections focused on single topics, and concrete data when relevant.

This pattern reinforces a core principle. AI systems favor content that's easy to extract and attribute. Pages that bury answers in lengthy preambles or spread key information across multiple sections are less likely to be cited.

The Bot Readability Problem Nobody Talks About

Here's where most optimization advice falls short. It assumes AI crawlers can already access and parse your content. That assumption is often wrong.

Before an AI system can cite your page, its crawler needs to successfully retrieve the content, render any dynamic elements, and interpret the structure. If any step in that process fails, your content is invisible to AI search—regardless of how good it is.

Common failure points include:

Rendering issues. JavaScript-heavy pages may not fully render for all bots. Content loaded dynamically after page load might never be seen by crawlers that don't execute scripts.

Structural ambiguity. Without proper markup, AI systems can't distinguish between main content and boilerplate elements like navigation, footers, or sidebars.

Schema gaps. Structured data formats like JSON-LD help AI systems understand what a page is about and how its information relates to user queries. Missing or incorrect schema reduces the likelihood of citation.

Crawl inefficiency. If bots encounter errors, slow load times, or blocked resources, they may abandon the crawl before indexing your content.

These technical failures create a visibility ceiling that no amount of content quality can overcome. The first question isn't "Is my content good enough to be cited?" It's "Can AI crawlers actually read my content?"
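A rough first check for the rendering problem is to look at the HTML a server returns before any JavaScript runs and see whether the key content is in it. The sketch below assumes you have already saved that raw HTML as a string. The function name `missing_without_js` and the sample page are hypothetical, and the check says nothing about what any particular crawler actually renders.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Gather text outside <script> and <style>, roughly what a non-rendering bot sees."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_without_js(raw_html, key_phrases):
    """Return the key phrases that do not appear in the unrendered HTML text."""
    parser = VisibleText()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [phrase for phrase in key_phrases if phrase.lower() not in text]


# Hypothetical page: the heading is in the HTML, the pricing text is injected by a script
raw = (
    '<html><body><h1>Pricing</h1><div id="app"></div>'
    '<script>render("Plans start at $49")</script></body></html>'
)
print(missing_without_js(raw, ["Pricing", "Plans start at $49"]))
```

Any phrase the function returns exists only after script execution, so a crawler that doesn't run JavaScript would likely never see it.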

What Machine-Readable Content Actually Looks Like

Creating content for AI visibility requires thinking about how machines process information—not just how humans read it.

Start with structural clarity. Each section should address one specific topic or question. Use headings that signal content accurately. Avoid clever or ambiguous titles that might confuse automated systems.

Lead with answers. AI systems often extract the first sentence or two under a heading as the "answer" to a query. Don't build up to your point—state it immediately, then provide supporting detail.

Use consistent formatting. Tables, numbered lists, and definition structures help AI systems identify extractable information. A comparison formatted as prose is harder to parse than one formatted as a table.

Implement comprehensive schema markup. JSON-LD structured data tells AI systems what type of content they're looking at (article, product, FAQ, how-to guide) and provides metadata that aids interpretation. Schema.org types such as FAQPage, HowTo, and Article may support inclusion in AI Overviews.
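As an illustration of what that markup looks like, here is a minimal sketch that builds a schema.org Article object and wraps it in a JSON-LD script tag. The helper name `article_jsonld` and all sample values are assumptions for the example. A production page would usually carry more properties, such as publisher and image.

```python
import json


def article_jsonld(headline, author, date_published, url):
    """Build a schema.org Article object and wrap it in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
        "mainEntityOfPage": url,
    }
    payload = json.dumps(data, indent=2)
    # Escape "</" so a value containing "</script>" cannot close the tag early
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical values for illustration
print(article_jsonld(
    headline="AI Search Visibility in 2026",
    author="Jane Doe",
    date_published="2026-01-15",
    url="https://example.com/ai-search-visibility",
))
```

The resulting tag goes in the page's HTML, typically in the head. Generating it from the same data that renders the page keeps the markup and the visible content from drifting apart.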

Ensure transcripts and alt text are complete. Any non-text content should have a text equivalent. This includes images, videos, audio files, and embedded elements.
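A quick way to find gaps is to scan a page's HTML for images with no alt text. The sketch below is one possible approach, and the class name `AltTextAudit` is illustrative. Note that purely decorative images legitimately use an empty alt attribute, so treat the output as a review list rather than a list of errors.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Record the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <img ... /> tags also reach this method via handle_startendtag
        if tag == "img":
            attr = dict(attrs)
            if not (attr.get("alt") or "").strip():
                self.missing.append(attr.get("src", "(no src)"))


def images_missing_alt(html):
    """Return the src values of images that have no usable alt text."""
    audit = AltTextAudit()
    audit.feed(html)
    audit.close()
    return audit.missing


# Hypothetical fragment
sample = (
    '<img src="ctr-chart.png" alt="Line chart of click-through rate by month">'
    '<img src="hero.png">'
    '<img src="team.jpg" alt=" "/>'
)
print(images_missing_alt(sample))
```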

The goal is reducing friction between your content and the AI systems trying to understand it. Every structural improvement makes extraction easier.

The Operational Framework: Building AI Visibility Systematically

Improving AI visibility isn't a one-time project. It requires ongoing diagnosis, optimization, and measurement. Here's a structured approach.

Phase One: Audit Your Current Bot Accessibility (Weeks 1-4)

Before optimizing content, confirm that AI systems can actually crawl and interpret your site.

Run a technical audit focused on:

- Crawlability across major bot user agents

- JavaScript rendering for content loaded dynamically

- Structured data implementation and validation

- Page speed and mobile performance

- Blocked resources in robots.txt or meta directives
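The robots.txt part of this audit can be sketched with Python's standard `urllib.robotparser`. The bot list below uses user-agent tokens published by their vendors (Google-Extended is a control token rather than a separate crawler). It is an illustrative starting set, not a complete one. The standard-library parser uses simple prefix matching, so it may not interpret wildcard rules the way each crawler does.

```python
from urllib.robotparser import RobotFileParser

# Illustrative set of AI-related user-agent tokens; extend as needed
AI_BOTS = ["GPTBot", "PerplexityBot", "ClaudeBot", "Google-Extended"]


def blocked_bots(robots_txt, path, bots=AI_BOTS):
    """Return the bots that the given robots.txt text disallows from fetching `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [bot for bot in bots if not parser.can_fetch(bot, path)]


# Hypothetical robots.txt: one AI crawler blocked site-wide, /admin/ blocked for everyone
robots = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""
print(blocked_bots(robots, "/blog/ai-search"))
```

Running the same check across your most important URLs shows whether a rule written years ago for a different purpose is now keeping AI crawlers out.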

Identify which pages already appear in AI Overviews or other AI-driven summaries. This establishes a baseline and reveals what's working.

Search OS approaches this through full bot log visibility, allowing teams to inspect all bot logs and see exactly how crawlers interact with their pages. When bot behavior is visible, diagnostic work becomes precise rather than speculative.
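To show the kind of signal bot logs contain, here is a generic sketch that counts requests and failed requests per bot from web server access logs. It is not Search OS's tooling. It assumes logs in the common "combined" format, and the sample lines and user-agent strings are made up for illustration.

```python
import re
from collections import Counter, defaultdict

# Combined Log Format tail: "METHOD path HTTP/x" status size "referer" "user-agent"
LOG_LINE = re.compile(
    r'"(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)
BOT_TOKENS = ["GPTBot", "PerplexityBot", "ClaudeBot", "Googlebot"]


def summarize_bot_hits(log_lines):
    """Return (hits per bot, failed paths per bot) where failed means a 4xx or 5xx status."""
    hits = Counter()
    failed_paths = defaultdict(list)
    for line in log_lines:
        match = LOG_LINE.search(line)
        if not match:
            continue
        agent = match["agent"].lower()
        token = next((t for t in BOT_TOKENS if t.lower() in agent), None)
        if token is None:
            continue  # not one of the bots we are tracking
        hits[token] += 1
        if match["status"].startswith(("4", "5")):
            failed_paths[token].append(match["path"])
    return hits, dict(failed_paths)


# Made-up log lines for illustration
sample_log = [
    '203.0.113.5 - - [10/Jan/2026:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '203.0.113.5 - - [10/Jan/2026:10:00:02 +0000] "GET /app.js HTTP/1.1" 503 0 "-" "Mozilla/5.0 (compatible; GPTBot/1.0)"',
    '198.51.100.7 - - [10/Jan/2026:10:01:00 +0000] "GET / HTTP/1.1" 200 900 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]
hits, failed = summarize_bot_hits(sample_log)
print(hits, failed)
```

User-agent strings can be spoofed, so a fuller audit would also verify crawler IP ranges against the lists vendors publish.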

Phase Two: Restructure Content for Extraction (Weeks 5-8)

With technical access confirmed, focus on content structure.

Prioritize pages with existing traffic or ranking potential. For each page:

- Reorganize sections around specific questions or topics

- Add or refine headings to signal content accurately

- Lead each section with a direct answer

- Convert appropriate content into tables, lists, or FAQ formats

- Implement or update schema markup

Search OS automates schema generation through automatic JSON-LD creation, eliminating manual markup work and ensuring structured data stays consistent across page updates.

Incorporate visual and multimedia elements with proper accessibility features. Update outdated statistics and refresh stale examples. AI models prioritize recent, credible information.

Phase Three: Optimize Delivery and Monitor Performance (Weeks 9-12)

Refine technical performance to ensure reliable bot access.

Key areas:

- Compress images and minimize render-blocking resources

- Simplify layouts and ensure mobile responsiveness

- Validate structured data through testing tools

- Confirm bot access across platforms (sites built on Shopify, Wix, Substack, and similar platforms often have platform-specific crawl considerations)
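Before running pages through a full structured data testing tool, a lightweight pre-check can catch the most basic problems: no JSON-LD at all, JSON that doesn't parse, or objects with no `@type`. The sketch below is a minimal example of that idea. The function name `check_jsonld` is an assumption, and it does not validate properties against schema.org or any search engine's requirements.

```python
import json
from html.parser import HTMLParser


class JsonLdBlocks(HTMLParser):
    """Collect the raw text of every <script type="application/ld+json"> element."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._inside = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._inside = True
            self.blocks.append("")

    def handle_data(self, data):
        if self._inside:
            self.blocks[-1] += data

    def handle_endtag(self, tag):
        if tag == "script":
            self._inside = False


def check_jsonld(html):
    """Return a list of problems; an empty list means the basic checks passed."""
    parser = JsonLdBlocks()
    parser.feed(html)
    parser.close()
    if not parser.blocks:
        return ["no JSON-LD block found"]
    problems = []
    for i, raw in enumerate(parser.blocks, start=1):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as err:
            problems.append(f"block {i}: invalid JSON ({err.msg})")
            continue
        for item in data if isinstance(data, list) else [data]:
            # An @graph wrapper holds the typed nodes one level down
            nodes = item.get("@graph", [item]) if isinstance(item, dict) else [item]
            for node in nodes:
                if not isinstance(node, dict) or "@type" not in node:
                    problems.append(f"block {i}: missing @type")
    return problems


# Hypothetical page with a trailing comma in its markup
page = '<script type="application/ld+json">{"@type": "Article",}</script>'
print(check_jsonld(page))
```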

Search OS provides cross-platform support, ensuring bot-readable structures work consistently regardless of the underlying CMS or website builder.

Ongoing: Measure and Iterate

AI search visibility fluctuates as models and algorithms evolve. Continuous monitoring is essential.

Track:

- Citation frequency across AI platforms

- Brand mentions in AI-generated answers

- Referral traffic from AI-affected queries

- Changes in traditional search impressions and CTR
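Citation frequency can be tracked with something as simple as a fixed set of prompts, re-run on a schedule, with the cited sources recorded each time. The sketch below computes the share of sampled answers that cite a given domain. The data structure, function names, and sample values are all hypothetical. How you collect the answers (by hand or through a monitoring tool) is outside its scope.

```python
from urllib.parse import urlparse


def cites_domain(source_url, domain):
    """True if the URL's host is the domain itself or one of its subdomains."""
    host = (urlparse(source_url).hostname or "").lower()
    return host == domain or host.endswith("." + domain)


def citation_rate(answers, domain):
    """Fraction of sampled AI answers that cite at least one URL on `domain`."""
    if not answers:
        return 0.0
    cited = sum(
        1 for answer in answers
        if any(cites_domain(url, domain) for url in answer["sources"])
    )
    return cited / len(answers)


# Hypothetical sample of prompts and the sources each AI answer cited
sample = [
    {"prompt": "best crm for agencies",
     "sources": ["https://www.example.com/crm", "https://other.test/a"]},
    {"prompt": "crm pricing under $150",
     "sources": ["https://other.test/b"]},
    {"prompt": "crm with salesforce integration",
     "sources": ["https://blog.example.com/sf"]},
    {"prompt": "crm comparison",
     "sources": ["https://notexample.com/x"]},
]
print(f"{citation_rate(sample, 'example.com'):.0%}")  # prints "50%"
```

Matching on the parsed hostname rather than a substring keeps lookalike domains from inflating the number.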

Adjust content and technical implementation based on what the data shows. The brands that iterate consistently will maintain visibility as AI search matures.

The Search OS Approach: Bot-First Infrastructure

Most AI visibility advice focuses on content—what to write, how to structure it, when to update it. That advice is necessary but insufficient.

The deeper challenge is infrastructure. Can AI crawlers access your content reliably? Can they parse its structure correctly? Do they encounter errors, delays, or ambiguities that cause them to abandon the crawl?

Search OS addresses this through bot-first pages and structures specifically designed for AI agent readability. Instead of retrofitting human-focused pages for machine consumption, it builds machine-readable outputs from the ground up.

Search OS reports the following results:

- 300× faster crawling.

- 0 crawl failures.

- In the same time window, bots ingest 300× more information.

- 80% labor cost reduction.

This approach combines automatic schema generation with bot-log-driven analysis. By examining exactly how crawlers interact with pages—what they access, what they skip, where they fail—teams can diagnose visibility problems with precision and verify fixes in real time.

The system operates as a continuous optimization loop. Content is structured for extraction, bot behavior is monitored, issues are identified and resolved, and visibility is tracked across AI platforms. The cycle repeats until target pages reliably surface in AI search environments.

Search OS also provides keyword and prompt prediction capabilities, helping teams anticipate how users will phrase queries in conversational AI contexts and structure content accordingly.

Common Misconceptions About AI Search Optimization

Several myths persist around AI visibility. Clearing them up prevents wasted effort.

Misconception: "High rankings automatically mean AI visibility."

Reality: AI systems cite content based on extractability and relevance to the specific query, not just domain authority or ranking position. A page ranking fifth can be cited over a page ranking first if its content is more clearly structured.

Misconception: "Schema markup guarantees inclusion in AI Overviews."

Reality: Structured data supports AI interpretation but doesn't guarantee citation. Google's documentation notes that schema types like FAQ and How-to markup may support inclusion—but they aren't sufficient on their own. Clear, self-contained content matters more.

Misconception: "AI search will eliminate the need for traditional SEO."

Reality: AI systems still rely on traditional search infrastructure for crawling and indexing. Backlinks, domain authority, and technical SEO remain relevant. AI visibility adds new requirements; it doesn't replace existing ones.

Misconception: "Only text content matters for AI search."

Reality: Multimodal AI systems process images, video, and audio. Content with complete transcripts, alt text, and captions has more surfaces for citation than text-only pages.

What Happens If You Wait

The shift toward AI search is accelerating. AI Overviews doubled their coverage in two months. Younger users are already defaulting to AI platforms for research. Click-through rates on traditional results are declining.

Brands that delay optimization face compounding disadvantage. As AI systems become more central to how users find information, the content that's structured for extraction will capture disproportionate visibility. Content that isn't will fade from view—not because it's low quality, but because machines can't read it.

The window for establishing AI visibility while competition is relatively low won't stay open indefinitely.

Key Takeaways

- AI search cites content, not pages. Success means being referenced in synthesized answers, not just ranking in a list of links.

- Longer, more complex queries demand clearer structure. Content needs to address specific scenarios with direct, extractable answers.

- Bot accessibility is a prerequisite. Before content quality matters, AI crawlers need to successfully access and parse your pages.

- Structured data aids interpretation. JSON-LD schema markup helps AI systems understand content type and context.

- Measurement must evolve. Citation frequency and AI mentions matter alongside traditional traffic metrics.

- Technical infrastructure determines the ceiling. No amount of content optimization overcomes fundamental crawl failures or rendering issues.

- Continuous iteration is required. AI search algorithms evolve constantly. Visibility maintenance requires ongoing monitoring and adjustment.

Stop guessing. Start seeing.

Search OS shows you exactly how AI crawlers interact with your pages—what they read, what they miss, and why. With automatic JSON-LD generation, bot-first page structures, and full log visibility, you can diagnose visibility gaps and fix them before your competitors do.