Why AI Search and Google Both Depend on One Thing You're Probably Ignoring

SEO gets you ranked. GEO gets you cited by AI. But both fail if bots can't read your content. Learn how to fix the machine-readability gap most marketers miss.

Before Rankings, Before AI Citations: The Bot Readability Problem

Search optimization is splitting into two disciplines.

SEO helps your pages rank in Google's results. Generative engine optimization—GEO—helps your content appear in AI-generated answers from ChatGPT, Perplexity, and Google's AI Overviews.

Most guides treat these as separate problems requiring separate strategies. They're missing something fundamental.

Both SEO and GEO assume the same thing: that crawlers and AI agents can actually read your content. That assumption fails more often than marketers realize.

This article explains what happens when bots visit your site, why traditional optimization skips the machine-readability layer, and how to build technical foundations that serve both search engines and AI assistants.

The Rise of AI Answers (And Why It Changes Everything)

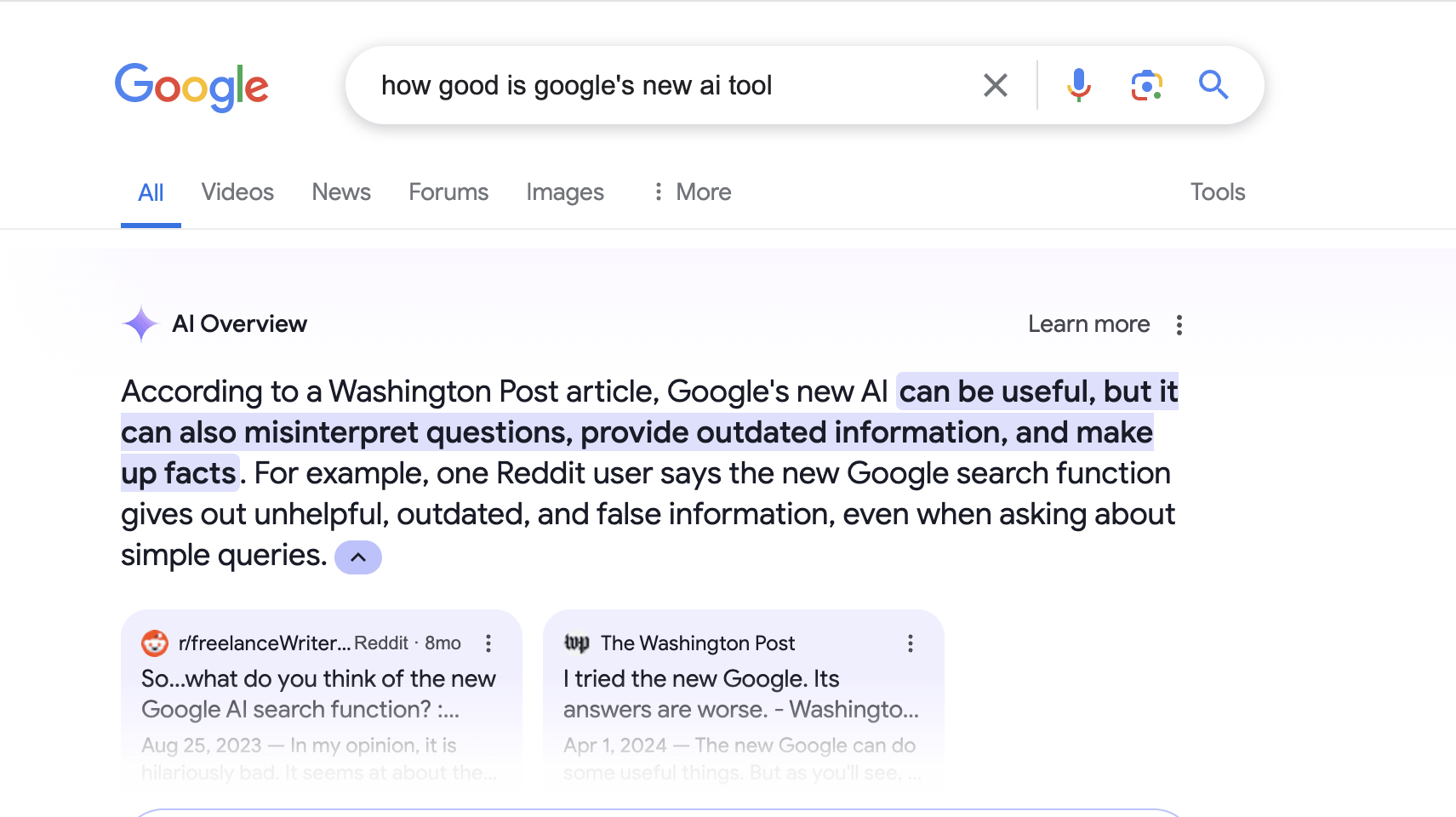

AI-generated answers are no longer experimental. They're mainstream.

As of March 2025, 13.14% of all search queries triggered Google's AI Overviews—up from 6.49% just two months earlier.

The shift in user behavior is equally dramatic. Research from Bain & Company found that about 80% of consumers now rely on zero-click results in at least 40% of their searches.

This creates a fundamental change in how visibility works:

- Traditional SEO gets your content listed in search results

- GEO gets your content recommended inside AI answers

The difference matters. When someone searches "best CRM tools" on Google, they see a ranked list of options. When they ask ChatGPT the same question, they get a synthesized recommendation—sometimes with your brand mentioned, sometimes without.

Each AI platform approaches this differently. ChatGPT synthesizes answers without always showing sources. Perplexity is citation-driven and highlights frequently linked pages. Google AI Overviews prefer Google-indexed content. Gemini follows Google ranking patterns but provides less structured results.

If your content isn't structured for these systems to parse, summarize, and cite, you're invisible in a growing share of search behavior.

What Actually Happens When Bots Visit Your Site

Here's what most marketers don't see: the gap between what humans read and what machines receive.

When a person visits your product page, they see formatted text, images, navigation, and calls to action. When a crawler or AI agent visits the same page, it receives raw HTML, the results of JavaScript execution (if it runs scripts at all), and whatever structured data you've provided (if any).

The problems start here:

JavaScript-dependent content. Many modern sites render key content through JavaScript. Google's crawler handles this reasonably well—but not perfectly. AI agents and other crawlers often don't execute JavaScript at all. If your pricing, product descriptions, or core messaging loads dynamically, those bots see empty containers.
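One rough way to test for this is to look at the raw HTML the way a non-rendering bot would and check whether your key phrases are in it. The sketch below is a minimal illustration using only the Python standard library; the sample page, the phrases, and the `missing_phrases` helper are all hypothetical.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collects text a non-JavaScript bot would see, skipping script/style bodies."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_phrases(raw_html, phrases):
    """Return the phrases that do not appear in the unrendered HTML text."""
    parser = VisibleText()
    parser.feed(raw_html)
    text = " ".join(parser.chunks).lower()
    return [p for p in phrases if p.lower() not in text]


# Hypothetical page: the price is injected by JavaScript after load.
sample = """
<html><body>
  <h1>Acme CRM</h1>
  <div id="price"></div>
  <script>document.getElementById("price").textContent = "$49 per month";</script>
</body></html>
"""
print(missing_phrases(sample, ["Acme CRM", "$49 per month"]))  # ['$49 per month']
```

To run this against a live page, fetch the body with `urllib.request.urlopen` and pass the decoded text to `missing_phrases`. Any phrase it returns is content a bot that skips JavaScript would likely never see.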

Missing or malformed schema. Schema markup (JSON-LD) tells machines what your content means—not just what words appear on the page. Is this a product? A how-to guide? A FAQ section? Without schema, crawlers and AI agents have to guess. They often guess wrong.
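A quick sanity check is to pull every JSON-LD block out of a page and confirm that it parses and declares a type. The following is a sketch under simple assumptions: the `audit_jsonld` helper and the sample markup are illustrative, and it only checks that the JSON is well formed, not that it satisfies schema.org's vocabulary.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Gathers the raw contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self._buffer = []
        self.blocks = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def audit_jsonld(raw_html):
    """Return one (status, detail) tuple per JSON-LD block found."""
    extractor = JsonLdExtractor()
    extractor.feed(raw_html)
    results = []
    for block in extractor.blocks:
        try:
            data = json.loads(block)
        except json.JSONDecodeError as err:
            results.append(("invalid", err.msg))
            continue
        item_type = data.get("@type") if isinstance(data, dict) else None
        results.append(("ok", item_type or "no @type"))
    return results


# Hypothetical markup: one valid block and one with a syntax error.
sample = """
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "Product", "name": "Acme CRM"}</script>
<script type="application/ld+json">{"@type": "FAQPage", "mainEntity": [}</script>
"""
for status, detail in audit_jsonld(sample):
    print(status, detail)
```

An empty result means the page ships no JSON-LD at all, which is the "missing" half of the problem.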

Crawl failures. Server timeouts, blocked resources, broken redirects, misconfigured robots.txt files—any of these can prevent bots from accessing your content entirely. You can't rank or get cited from pages that crawlers never successfully retrieve.
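The robots.txt case is the easiest of these to check yourself. Here is a minimal sketch using Python's built-in `urllib.robotparser`; the rules and URLs are made up, and the list of user-agent tokens is an assumption you should adjust to the bots you care about.

```python
from urllib.robotparser import RobotFileParser

# User-agent tokens to test; an illustrative, incomplete list.
BOT_AGENTS = ["Googlebot", "GPTBot", "PerplexityBot"]


def blocked_agents(robots_txt, url, agents=BOT_AGENTS):
    """Return the agents that the given robots.txt text forbids from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


# Hypothetical robots.txt that shuts out one AI crawler entirely.
sample_robots = """
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /admin/
"""
print(blocked_agents(sample_robots, "https://example.com/pricing"))  # ['GPTBot']
```

Python's parser applies rules in file order, which may differ from how a given crawler resolves conflicting rules, so treat the result as a first-pass signal.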

Structural ambiguity. Even when content loads correctly, poor HTML structure makes it hard for machines to identify what's important. When your key information is buried in nested divs without semantic markup, AI systems struggle to extract and summarize it accurately.

The traditional optimization workflow—keyword research, content creation, link building—assumes these foundational problems are already solved. For many sites, they aren't.

The Shared Dependency: What Both Google and LLMs Need

Despite their differences, search engines and AI assistants share core requirements for content consumption.

Clear structure. Both systems reward content organized with proper headings, logical hierarchy, and semantic HTML. Bullet points, FAQs, and direct answers to specific questions help both Google's featured snippets and AI-generated summaries.

Quality signals. Google evaluates content quality through relevance, backlinks, domain authority, and user engagement. LLMs evaluate quality through mentions across the web, factual accuracy, and whether excerpts make sense when quoted out of context. Both prioritize content that demonstrates expertise and earns trust from other sources.

Freshness. Updated content matters for both systems—though LLMs appear to prioritize recency even more heavily than traditional search algorithms. Content that hasn't been refreshed may lose citations in AI answers faster than it loses rankings in Google.

Machine readability. This is where most optimization efforts fall short. Both search engines and AI agents need content delivered in formats they can reliably parse. That means clean HTML, properly implemented schema markup, and server infrastructure that responds consistently to automated requests.

The overlap here is significant. If you optimize for one system correctly, you build foundations that serve the other. But the reverse is also true: structural problems that hurt your SEO also hurt your GEO, often more severely.

Where Traditional Optimization Stops Short

Standard SEO workflows focus on three areas: keyword targeting, content quality, and backlink acquisition.

These matter. But they all assume something that isn't always true—that your content is actually reachable and readable by the systems you're optimizing for.

The keyword fallacy. You research keywords, incorporate them into headings and body text, optimize meta descriptions. But if your page takes 8 seconds to render because of unoptimized JavaScript, or if your schema markup is malformed, or if your server returns intermittent 503 errors—those keywords never reach the algorithms evaluating them.

The content quality gap. You create comprehensive, well-researched content with original insights. Excellent. But LLMs don't just read your page—they need to extract, summarize, and cite it accurately. If your content structure doesn't support that extraction (because key points are buried in image carousels or accordion elements that don't render for bots), your quality never translates to AI visibility.

The link-building limitation. Backlinks signal authority. But authority signals only matter after crawlers can access and process your content. A page with 100 referring domains that fails to render correctly for AI agents won't earn citations from ChatGPT or Perplexity.

The missing step is what happens before all of this: ensuring that every crawler and AI agent receives complete, structured, parseable content every time they visit.

Building Machine-Readable Foundations

Fixing the bot readability problem requires work across four areas.

1. Bot-First Page Architecture

Design pages assuming the primary consumer is a machine, not a human. This doesn't mean sacrificing user experience—it means ensuring that the content humans see is the same content bots receive.

Serve critical content in initial HTML rather than relying on JavaScript hydration. Use semantic HTML elements (article, section, nav, header) that communicate document structure. Place key information—product names, prices, core value propositions—in positions that render regardless of script execution.
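To see how much structure your initial HTML communicates, you can count the semantic elements and list the heading outline a parser would recover. This is only a sketch: `describe_structure` and the sample markup are hypothetical, and the element list is limited to the four named above.

```python
from collections import Counter
from html.parser import HTMLParser

SEMANTIC_TAGS = {"article", "section", "nav", "header"}
HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class StructureReport(HTMLParser):
    """Counts semantic elements and divs, and records the heading outline."""

    def __init__(self):
        super().__init__()
        self.tag_counts = Counter()
        self.outline = []
        self._heading = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in SEMANTIC_TAGS or tag == "div":
            self.tag_counts[tag] += 1
        if tag in HEADING_TAGS:
            self._heading = tag
            self._text = []

    def handle_data(self, data):
        if self._heading:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == self._heading:
            self.outline.append((tag, "".join(self._text).strip()))
            self._heading = None


def describe_structure(raw_html):
    """Return (tag counts, heading outline) for the unrendered HTML."""
    report = StructureReport()
    report.feed(raw_html)
    return dict(report.tag_counts), report.outline


# Hypothetical server-rendered product page.
sample = """
<header><nav><a href="/">Home</a></nav></header>
<article>
  <h1>Acme CRM</h1>
  <section><h2>Pricing</h2><p>$49 per month</p></section>
</article>
"""
counts, outline = describe_structure(sample)
print(counts)
print(outline)
```

A page that returns dozens of `div` elements, no semantic elements, and an empty outline is a likely candidate for restructuring.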

Search OS creates bot-first pages that present core information in machine-readable structures by default. This removes the gap between what users see and what AI agents receive.

2. Automatic Schema Generation

Schema markup should be comprehensive and accurate across your entire site—not just hand-coded on a few key pages.

Every product page needs Product schema. Every article needs Article or BlogPosting schema. FAQ sections need FAQPage schema. Local businesses need LocalBusiness schema. And all of this needs to be generated dynamically as your content changes, not maintained manually.
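Generating schema from your product data, rather than typing it by hand, can be as simple as the sketch below. It is not how Search OS does it; the `product_jsonld` function and the shape of the `product` dictionary are assumptions for illustration, using the standard schema.org `Product` and `Offer` types.

```python
import json


def product_jsonld(product):
    """Build a JSON-LD script tag from a product record (hypothetical field names)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product["name"],
        "description": product["description"],
        "offers": {
            "@type": "Offer",
            "price": f'{product["price"]:.2f}',
            "priceCurrency": product["currency"],
        },
    }
    # Escape "<" so text such as "</script>" in a field cannot end the tag early.
    payload = json.dumps(data, indent=2).replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(product_jsonld({
    "name": "Acme CRM",
    "description": "CRM for small teams",
    "price": 49,
    "currency": "USD",
}))
```

Because the markup is rebuilt from the same record that renders the page, a price change in your catalog changes the schema at the same time.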

Search OS provides automatic schema generation (JSON-LD) that keeps structured data current across your site. When you update a product price or publish a new article, the corresponding schema updates automatically.

3. Full Crawl Visibility

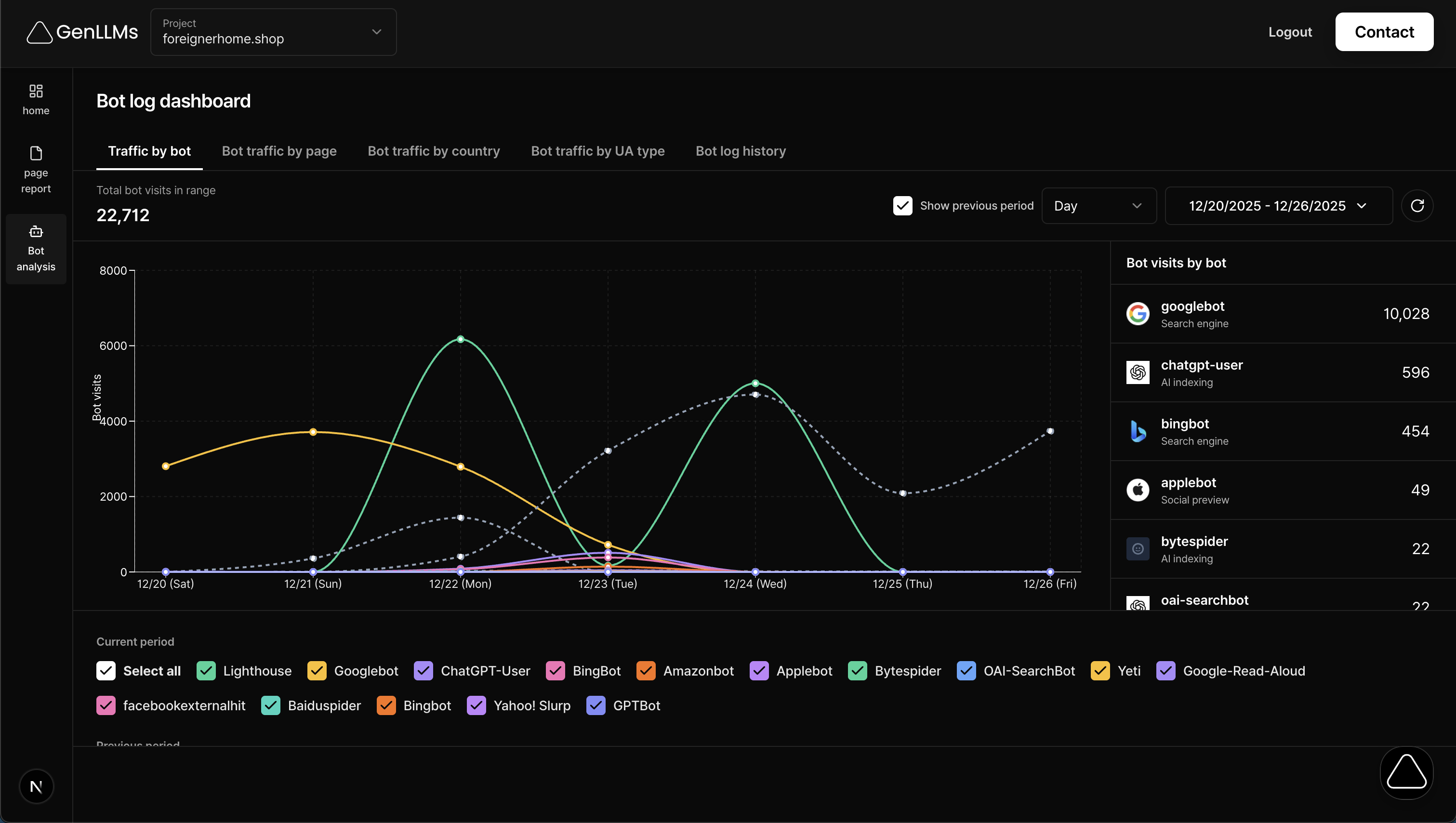

You can't fix problems you can't see. Most analytics tools show you human traffic patterns. They don't show you what bots experience.

Understanding bot behavior requires different instrumentation: which crawlers visited, when, which pages they accessed, whether those requests succeeded, how long responses took, and what content was actually served.
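If you have access to raw server logs, a small parser can answer most of those questions. The sketch below assumes the common "combined" access log format with the response time in seconds appended as a final field; that trailing field, the sample lines, and the bot list are assumptions, so adapt the pattern to your server's configuration.

```python
import re
from collections import namedtuple

BotHit = namedtuple("BotHit", "bot path status seconds")

# Assumed layout: combined log format plus a trailing response time in seconds.
LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "(?:GET|HEAD|POST) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)" (?P<seconds>[\d.]+)'
)

# User-agent substrings for a few well-known crawlers; extend as needed.
KNOWN_BOTS = ["Googlebot", "GPTBot", "PerplexityBot", "ClaudeBot", "bingbot"]


def parse_bot_hits(lines):
    """Return a BotHit for every log line whose user agent names a known bot."""
    hits = []
    for line in lines:
        match = LINE.match(line)
        if not match:
            continue
        agent = match["agent"].lower()
        bot = next((b for b in KNOWN_BOTS if b.lower() in agent), None)
        if bot:
            hits.append(
                BotHit(bot, match["path"], int(match["status"]), float(match["seconds"]))
            )
    return hits


# Hypothetical log lines (documentation IP ranges, invented timings).
sample_log = [
    '192.0.2.10 - - [10/Mar/2025:10:00:00 +0000] "GET /pricing HTTP/1.1" 200 5123 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" 0.120',
    '198.51.100.7 - - [10/Mar/2025:10:00:05 +0000] "GET /pricing HTTP/1.1" 503 0 "-" '
    '"Mozilla/5.0 (compatible; GPTBot/1.0; +https://openai.com/gptbot)" 8.400',
    '203.0.113.9 - - [10/Mar/2025:10:00:07 +0000] "GET / HTTP/1.1" 200 7000 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)" 0.300',
]
for hit in parse_bot_hits(sample_log):
    print(hit)
```

User-agent strings can be spoofed, so a production setup would also verify the requester, for example with the reverse DNS checks that major crawlers document.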

Search OS gives you full bot log visibility—you can inspect all bot logs to see exactly how crawlers and AI agents interact with your site. When something breaks, you know immediately. When optimization works, you have evidence.

4. Cross-Platform Consistency

Bot readability problems aren't limited to custom-built sites. They occur on Shopify stores, Wix sites, Substack publications, and every other platform.

Each platform has its own quirks in how it serves content to bots. Shopify themes may hide critical product information in JavaScript-rendered components. Wix's page builder can generate HTML that crawlers struggle to parse. Substack's newsletter pages may not include structured data that AI systems need for accurate citation.

Search OS works across Shopify, Wix, Substack, and similar platforms—applying consistent bot-first optimization regardless of where your content lives.

Measuring What Matters: Success Metrics for Both Worlds

SEO and GEO use different metrics because they track different outcomes.

Traditional SEO metrics:

- Organic traffic

- Keyword rankings

- Click-through rate

- Bounce rate

- Conversions from search

GEO metrics:

- Citation frequency in AI tools

- Brand or product mentions in AI answers

- Share of voice across AI platforms

But both categories depend on metrics that most dashboards don't show—the bot-level performance data that determines whether optimization efforts ever reach their target systems.

Crawl success rate tells you what percentage of bot requests actually receive complete content. Response time for bots shows whether your infrastructure handles automated traffic efficiently. Schema validation rates reveal whether your structured data is actually usable. AI agent coverage tracks which AI systems are visiting and successfully processing your pages.
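Two of these metrics are straightforward to compute once bot requests are isolated. The sketch below treats any 2xx response as a success, which is a simplifying assumption: a 200 response that delivers an empty JavaScript shell would still count, so pair it with a content check. The `bot_metrics` function and sample data are hypothetical.

```python
from collections import defaultdict
from statistics import median


def bot_metrics(hits):
    """hits: iterable of (bot_name, http_status, response_seconds) tuples."""
    grouped = defaultdict(list)
    for bot, status, seconds in hits:
        grouped[bot].append((status, seconds))

    report = {}
    for bot, rows in grouped.items():
        ok = sum(1 for status, _ in rows if 200 <= status < 300)
        report[bot] = {
            "requests": len(rows),
            "crawl_success_rate": ok / len(rows),
            "median_seconds": median(seconds for _, seconds in rows),
        }
    return report


# Invented sample: one crawler is healthy, the other fails half the time.
sample_hits = [
    ("Googlebot", 200, 0.25),
    ("Googlebot", 200, 0.75),
    ("GPTBot", 200, 0.5),
    ("GPTBot", 503, 8.5),
    ("GPTBot", 503, 9.5),
    ("GPTBot", 200, 0.5),
]
for bot, stats in bot_metrics(sample_hits).items():
    print(bot, stats)
```

Splitting the numbers per bot matters because an aggregate success rate can look healthy while one AI crawler is failing on most of its requests.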

Without these foundational metrics, you're optimizing blind. Traffic might decline because of algorithm changes—or because bots started failing to access your pages three weeks ago and you never noticed.

Search OS tracks keyword and prompt patterns, showing you not just what bots are doing, but what queries and prompts are driving AI systems to evaluate your content. This connects crawl behavior to actual search and AI visibility outcomes.

The Continuous Optimization Loop

Neither SEO nor GEO is a one-time project. Both require ongoing iteration as algorithms evolve, competitors adapt, and your own content changes.

The workflow that serves both systems follows a consistent pattern:

Diagnose. Review bot logs to identify crawl failures, slow responses, and structural problems. Understand which pages AI agents successfully process and which they struggle with.

Fix. Address technical issues—rendering problems, schema errors, server reliability—before worrying about content optimization. Foundation first.

Verify. Confirm that fixes actually changed bot behavior. Did crawl success rates improve? Are AI agents now receiving complete content? Is schema validating correctly?

Optimize. With foundations solid, focus on content quality, freshness, and authority signals that improve rankings and citation likelihood.

Monitor. Track both traditional SEO metrics and AI visibility metrics. Watch for changes that indicate new problems or opportunities.

Repeat. As you publish new content, launch new products, or update existing pages, run through the cycle again.

Search OS implements this as a continuous optimization loop: it uses bot-log analysis to confirm that core information reaches bots intact, then iterates until target pages reliably surface in both AI search and traditional search results.

The results Search OS reports speak to the magnitude of the problem and the opportunity in solving it: 300× faster crawling, zero crawl failures, 300× more information ingested by bots in the same time window, and an 80% reduction in labor costs.

These aren't incremental improvements. They're what happens when you fix the machine-readability layer that most optimization ignores entirely.

A Different Way to Think About Search Optimization

The SEO vs. GEO debate frames search optimization as two competing disciplines requiring separate strategies.

That framing misses the deeper pattern. Both systems depend on the same technical foundation: content that machines can access, parse, and understand. Get that foundation wrong, and no amount of keyword research or authority building will save you. Get it right, and you build leverage that compounds across both search engines and AI assistants.

Traditional SEO tools excel at competitive research, keyword analysis, and ranking tracking. Emerging GEO tools help you monitor AI citations and brand mentions across platforms. These remain valuable.

But before those tools can help, you need something more fundamental: confirmation that your content actually reaches the systems you're optimizing for, in formats those systems can use.

That's the problem Search OS solves. Bot-first pages. Automatic schema. Full crawl visibility. Cross-platform coverage. Continuous optimization until your content surfaces where your audience is searching—whether that's Google's results page, ChatGPT's answers, or the next AI platform you haven't heard of yet.

The future of search is fragmented across multiple systems with different algorithms and output formats. The foundation that serves all of them is the same: machine-readable content, reliably delivered, continuously optimized.

Start there.

Key Takeaways

- AI answers are mainstream: as of March 2025, 13.14% of queries triggered AI Overviews, and about 80% of consumers rely on zero-click results in at least 40% of their searches.

- SEO and GEO share a dependency: Both require content that machines can access and parse. Missing this layer undermines all other optimization efforts.

- Bot readability fails silently: JavaScript rendering issues, schema problems, and crawl failures often go undetected in standard analytics.

- Each AI platform behaves differently: ChatGPT, Perplexity, Google AI Overviews, and Gemini each have distinct citation patterns and content preferences.

- Foundation before optimization: Fix technical accessibility before investing in content quality and authority building.

- Measurement requires bot-level data: Traditional SEO metrics don't show whether bots successfully receive your content.

- Continuous iteration serves both worlds: The diagnose → fix → verify → optimize → monitor cycle applies to SEO and GEO equally.

See What Bots Actually See

Your analytics show human traffic. Search OS shows you what crawlers and AI agents experience—every request, every failure, every opportunity. Start your free audit and find out if your content is actually reaching the algorithms you're optimizing for.