Ecommerce Crawlability: The Technical Gap Shopify and WordPress Don't Fix

Shopify or WordPress? The platform debate misses what actually determines bot discoverability. Learn the technical infrastructure layer that drives ecommerce visibility.

The Technical Infrastructure Gap That Decides Whether Bots Find Your Store

The ecommerce platform debate usually goes like this: Shopify for simplicity, WordPress for control. Thousands of comparison guides rehash the same decision tree—budget, coding skills, customization needs.

None of them address the actual problem.

Your platform handles what humans see. It manages themes, checkout flows, and inventory. But it has almost no opinion on what search engine crawlers and AI agents see when they visit your store. That gap—between human-facing storefronts and bot-readable infrastructure—is where visibility lives or dies.

Most ecommerce stores lose traffic not because they chose the wrong platform, but because they never addressed the layer between their platform and the bots trying to index their products. This article explains what that layer is, why platforms don't solve it, and how to fix it regardless of whether you run Shopify, WordPress, or anything else.

The Discovery Problem Nobody Talks About

Here's a scenario that plays out constantly: A store owner spends weeks perfecting product descriptions, optimizing images, and configuring shipping rules. The storefront looks great. Customers who find it convert well.

But organic traffic stays flat.

The store owner assumes they need more backlinks, better keywords, or different content. They might even switch platforms, thinking Shopify was limiting their SEO or WordPress was too complicated to configure properly.

The actual issue? Crawlers never properly indexed the product catalog in the first place. The bots visited, encountered rendering delays or malformed data structures, and moved on. The store exists in a sort of search engine limbo—technically indexed, but practically invisible for commercial queries.

This happens because ecommerce platforms optimize for human visitors, not machine visitors. They prioritize visual design, conversion psychology, and checkout friction reduction. The technical infrastructure that makes content legible to crawlers gets treated as an afterthought, if it's addressed at all.

What Platform Choice Actually Determines

Platform comparisons focus on operational concerns: hosting management, theme availability, plugin ecosystems, pricing. These matter for running a store. They barely matter for search visibility.

Consider the hosting question. Shopify provides fully managed hosting, which eliminates server maintenance but limits access to server logs and configuration files. WordPress requires selecting and paying for separate hosting, which adds complexity but provides more control over server-side settings.

From a bot discoverability perspective, neither approach inherently wins. Managed hosting can deliver fast page loads but restricts your ability to diagnose crawl issues. Self-managed hosting allows deep configuration but introduces more variables that can break.

The same pattern repeats across platform features:

Theme systems. Shopify offers 140+ themes designed for quick launches. WordPress provides thousands of themes with varying quality. Both can produce stores that render beautifully for humans while generating markup that confuses crawlers.

Plugin and app ecosystems. WordPress offers over 1,000 ecommerce-related plugins; Shopify's App Store covers similar territory. Most focus on human-facing features—upsells, reviews, loyalty programs. Few address structured data generation or crawl optimization.

SEO tools. Both platforms support on-page SEO basics: custom title tags, meta descriptions, alt text, URL customization. These help, but they're table stakes. They don't address whether bots can efficiently parse your product catalog or understand the relationships between your pages.

The platform handles commerce operations. It doesn't handle bot-first infrastructure.

The Server Access Gap

One legitimate technical difference between managed and self-hosted platforms: access to server logs and configuration files.

WordPress users who manage their own hosting can access server logs directly, modify caching rules via configuration files, and implement custom redirect logic. This visibility lets technically skilled operators diagnose crawl issues at the server level.
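For operators who do have raw access logs, a first pass at crawler activity can be fairly simple. The sketch below assumes the common Combined Log Format (check your server's actual log format first) and treats a hypothetical list of user-agent substrings as "crawlers". It counts crawler requests per HTTP status and per path. User-agent strings can be spoofed, so treat these counts as approximate unless you also verify the requesting IPs.

```python
import re
from collections import Counter

# Assumed Combined Log Format line, as commonly written by Apache and nginx.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical list of user-agent substrings to treat as crawler traffic.
CRAWLER_TOKENS = ("Googlebot", "bingbot", "GPTBot")


def summarize_crawler_hits(lines):
    """Count crawler requests per status code and per requested path."""
    status_counts = Counter()
    path_counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match:
            continue  # skip lines that don't fit the assumed format
        if not any(token in match["agent"] for token in CRAWLER_TOKENS):
            continue  # not a crawler we are tracking
        status_counts[match["status"]] += 1
        path_counts[match["path"]] += 1
    return status_counts, path_counts
```

A rising share of 404 or 5xx responses in `status_counts`, or product paths missing from `path_counts`, is the kind of signal worth investigating.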

Shopify's managed architecture trades this access for simplicity. You get a working store without touching servers, but you can't inspect raw server logs to see exactly how crawlers interacted with your pages.

Neither situation automatically produces better crawl outcomes. Server access means nothing if you don't know what to look for. Managed hosting can still deliver excellent crawlability if the platform handles bot interactions well.

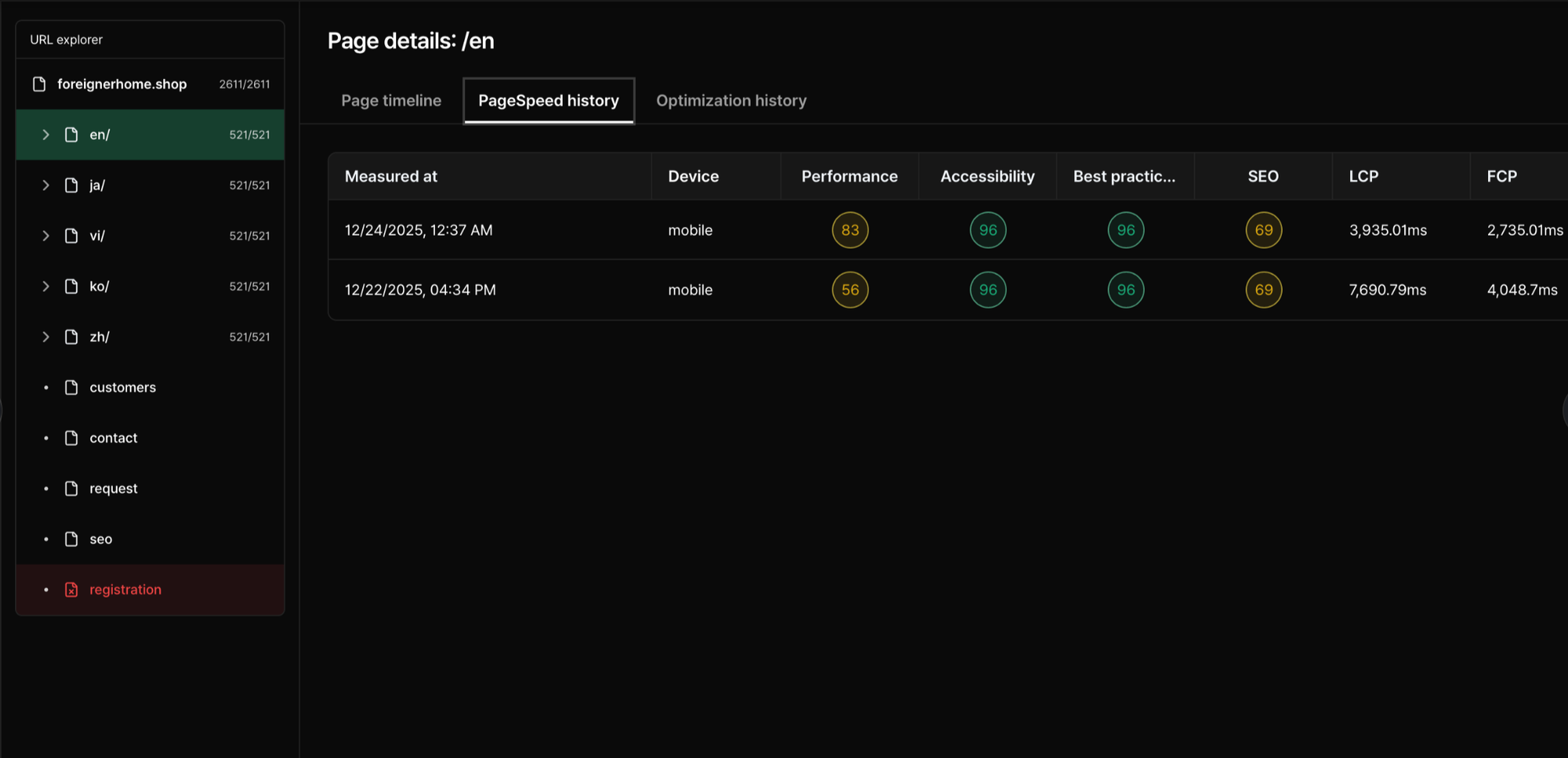

What both approaches lack: purpose-built bot log visibility that shows you exactly what crawlers encountered, which pages they prioritized, where they hit errors, and how efficiently they consumed your content.

This is where specialized infrastructure becomes essential. When you can inspect all bot logs—not just server access logs, but actual crawler behavior data—you can diagnose problems that neither platform surfaces by default.

Search OS provides full bot log visibility across any platform. Whether you're on Shopify, WordPress, Wix, or Substack, you get the same diagnostic capability: seeing exactly what bots see when they visit your store.

Why Product Pages Fail the Bot Test

Ecommerce sites present unique crawlability challenges that content sites don't face.

A blog post is relatively simple: one URL, one block of content, minimal dynamic elements. Crawlers know how to handle this format because it's been standard since the early web.

Product pages are different. They contain structured information—prices, availability, variants, reviews, specifications—that needs machine-readable formatting to be properly understood. They often load content dynamically through JavaScript. They exist within complex category hierarchies that crawlers must navigate efficiently.

Most product pages fail the bot test in predictable ways:

Missing or malformed structured data. Product information exists in human-readable format but lacks the JSON-LD markup that tells crawlers "this is a product with these specific attributes." Without structured data, crawlers must guess at meaning from context—and they guess wrong constantly.

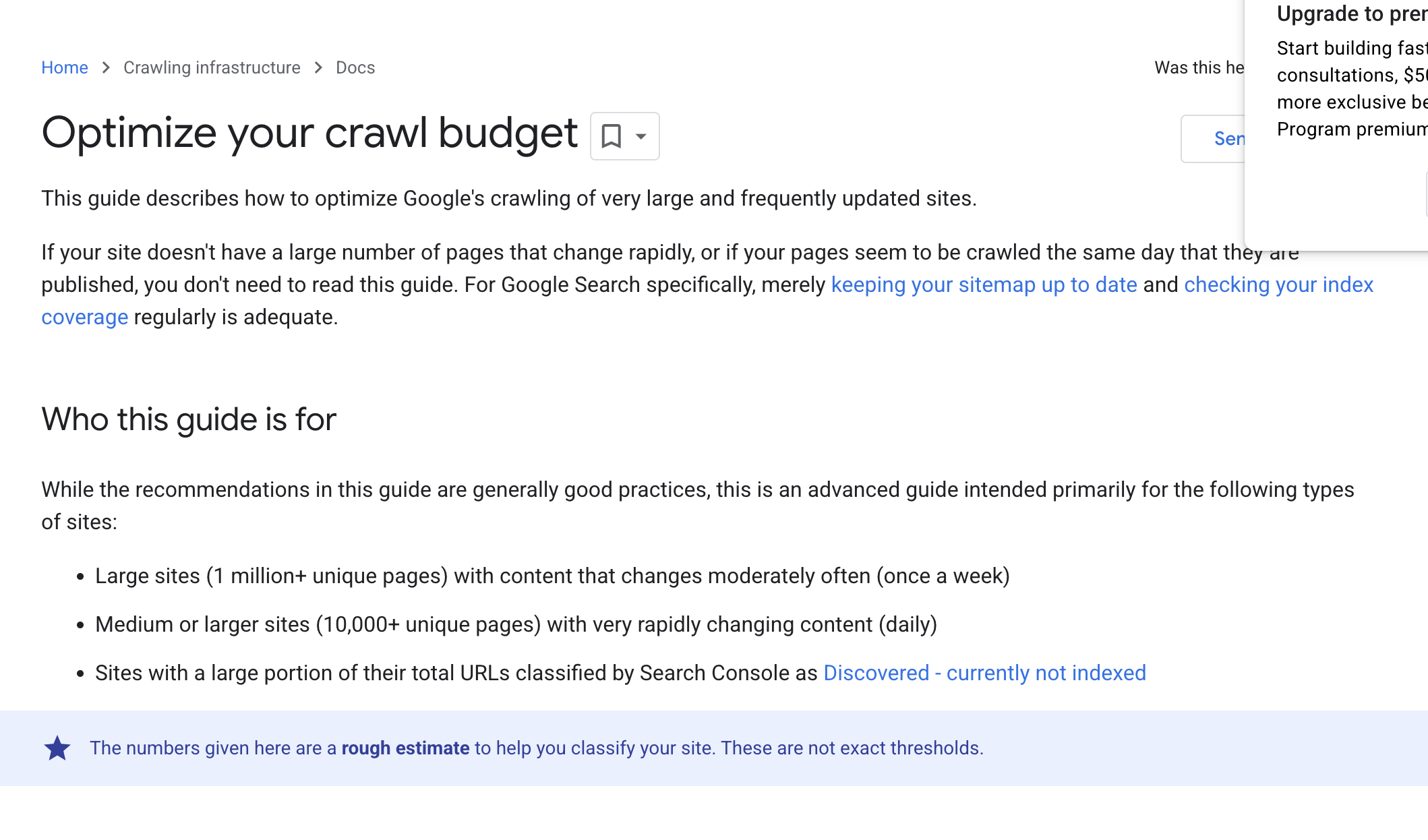

Inefficient crawl paths. Crawlers have limited budgets for how many pages they'll process on any domain. If your site architecture forces them through low-value category pages before reaching products, they may exhaust their budget before indexing your actual inventory.

Duplicate or near-duplicate content. Product variants, filtered views, and pagination often create multiple URLs with similar content. Without proper canonical signals, crawlers waste budget indexing redundant pages.

Render-dependent content. If product details load via JavaScript after initial page load, crawlers may index the page before that content appears. They see an empty shell instead of your carefully written descriptions.
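One rough way to test for render-dependent content is to look at the HTML a server returns before any JavaScript runs and check whether the product description and any JSON-LD are already there. The sketch below works on an HTML string (fetching it, e.g. with `urllib.request.urlopen`, is left out so it runs offline); `audit_initial_html` is a hypothetical helper name. It only approximates a non-rendering crawler: Google does render JavaScript in a later pass, but other bots may not execute it at all.

```python
from html.parser import HTMLParser


class RawHTMLScanner(HTMLParser):
    """Collects visible text and notes JSON-LD tags without executing JavaScript."""

    def __init__(self):
        super().__init__()
        self.text_parts = []
        self.has_json_ld = False
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
            if tag == "script" and dict(attrs).get("type") == "application/ld+json":
                self.has_json_ld = True

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:  # ignore script/style bodies
            self.text_parts.append(data)


def audit_initial_html(raw_html, expected_snippet):
    """Report whether the snippet and any JSON-LD exist in the pre-render HTML."""
    scanner = RawHTMLScanner()
    scanner.feed(raw_html)
    scanner.close()
    # Collapse whitespace so line breaks in the markup don't hide a match.
    visible = " ".join(" ".join(scanner.text_parts).split())
    snippet = " ".join(expected_snippet.split())
    return {"description_in_html": snippet in visible, "has_json_ld": scanner.has_json_ld}
```

A page that returns `False` for both keys is likely an empty shell that depends on client-side rendering.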

Fixing these issues requires infrastructure-level changes, not platform switches.
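For the duplicate-content problem described above, a useful first diagnostic is to group crawled URLs that differ only by parameters assumed not to change the page, then check whether each group declares a canonical URL. The parameter list below is a hypothetical example; on some stores a `variant` parameter really does change the content and should be kept.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed NOT to change product content.
NON_CONTENT_PARAMS = {"sort", "view", "utm_source", "utm_medium", "utm_campaign", "variant"}


def normalize(url):
    """Drop non-content parameters, sort the rest, and trim trailing slashes."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in NON_CONTENT_PARAMS)
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme, parts.netloc.lower(), path, urlencode(kept), ""))


def duplicate_clusters(urls):
    """Return groups of two or more URLs that normalize to the same address."""
    groups = defaultdict(set)
    for url in urls:
        groups[normalize(url)].add(url)
    return {key: sorted(members) for key, members in groups.items() if len(members) > 1}
```

Each key in the result is a candidate canonical URL; the members are pages that would probably benefit from a `rel="canonical"` link pointing at it.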

Schema Markup as Universal Bot Language

Structured data—specifically JSON-LD schema markup—functions as a universal translation layer between your product information and crawler understanding.

When properly implemented, schema markup tells crawlers exactly what each data point means. Price isn't just "a number near a buy button"—it's explicitly labeled as the offer price for a specific product variant, in a specific currency, valid until a specific date.
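To make the price example concrete, here is a minimal sketch that builds schema.org `Product` markup with a single `Offer`, using the real schema.org properties `price`, `priceCurrency`, `priceValidUntil`, and `availability`. The helper name `product_json_ld` and the sample values are hypothetical, and a real catalog would typically need more fields (images, brand, multiple variants).

```python
import json


def product_json_ld(name, sku, price, currency, valid_until, in_stock, url):
    """Build a schema.org Product object with one Offer (illustrative sketch)."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "url": url,
            "price": f"{price:.2f}",  # explicit two-decimal price string
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "priceValidUntil": valid_until,  # ISO 8601 date
            "availability": "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock",
        },
    }


markup = product_json_ld(
    "Blue Shirt", "BS-001-M", 29.0, "USD", "2025-12-31", True,
    "https://shop.example.com/products/blue-shirt?variant=123",
)
# Escape "</" so a product string can never close the script tag early.
script_tag = '<script type="application/ld+json">' + json.dumps(markup).replace("</", "<\\/") + "</script>"
```

Generating the markup from the same data that renders the page is what keeps price and availability in the structured data from drifting away from what shoppers see.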

This matters for two reasons.

First, rich results. Proper product schema enables enhanced search listings with prices, ratings, and availability displayed directly in results. These listings capture attention and clicks that plain text results miss.

Second, AI interpretation. Large language models and AI search systems increasingly rely on structured data to understand web content. Pages with clear schema markup communicate their meaning unambiguously. Pages without it force AI systems to infer meaning—and inference introduces errors.

The problem: schema markup is tedious to implement manually and easy to break accidentally. A theme update, plugin conflict, or content change can silently invalidate your structured data. Most store owners never check whether their schema remains valid after changes.
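Catching silent breakage mostly means re-checking markup after every change. The sketch below extracts JSON-LD blocks from a page's HTML and reports unparseable JSON or `Product` objects missing a few fields. The required-field list is an assumption chosen for illustration, not Google's official rule set, and the sketch ignores `@graph` containers for brevity.

```python
import json
from html.parser import HTMLParser


class JSONLDExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> tag."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_json_ld = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self.blocks.append("".join(self._buffer))
            self._in_json_ld = False


# Fields this sketch chooses to require on each Offer (an assumption).
REQUIRED_OFFER_FIELDS = ("price", "priceCurrency", "availability")


def product_schema_problems(html):
    """Return a list of human-readable problems; empty means none were found."""
    parser = JSONLDExtractor()
    parser.feed(html)
    parser.close()
    problems, products = [], []
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"invalid JSON-LD: {exc.msg}")
            continue
        items = data if isinstance(data, list) else [data]
        products += [i for i in items if isinstance(i, dict) and i.get("@type") == "Product"]
    if not products and not problems:
        problems.append("no Product markup found")
    for product in products:
        if not product.get("name"):
            problems.append("Product missing name")
        offer = product.get("offers")
        if isinstance(offer, list):
            offer = offer[0] if offer else None
        if not isinstance(offer, dict):
            problems.append("Product missing offers")
            continue
        problems += [f"Offer missing {f}" for f in REQUIRED_OFFER_FIELDS if f not in offer]
    return problems
```

Running a check like this across every product URL after each theme, plugin, or content update is one way to turn "silent" failures into visible ones.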

Search OS handles automatic schema generation for product pages, category pages, and supporting content. The system produces valid JSON-LD markup that stays synchronized with your actual product data, across Shopify, WordPress, Wix, Substack, and similar platforms.

This isn't just convenience. Automatic generation eliminates the silent failures that plague manual schema implementations.

Making Your Store AI-Readable

Traditional crawlers from search engines have well-documented behaviors. You can study Google's guidelines, observe crawl patterns in server logs, and optimize accordingly.

AI agents operate differently. They consume content to answer questions, not to build indexes. They may visit once to understand your offerings, then cite that understanding in responses to user queries—or not, depending on how well they parsed your content.

The stakes here keep rising. AI-assisted search increasingly shapes how consumers discover products. If AI systems can't efficiently extract and understand your product information, you don't appear in their responses.

What makes content AI-readable?

Clear information architecture. AI agents don't navigate through clicking. They process page content directly. If your product information requires multiple page loads or complex interactions to access, AI agents may miss it entirely.

Consistent formatting. Structured, predictable content layouts help AI systems identify patterns. When your product pages follow consistent templates, AI agents learn your site's logic and extract information more reliably.

Explicit relationships. Category hierarchies, product comparisons, and attribute relationships should be encoded in markup, not just implied by design. AI systems handle explicit relationships better than implied ones.
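Category hierarchy is one relationship that schema.org can encode directly through `BreadcrumbList` and `ListItem` with explicit `position` values. The sketch below assumes you already know each product's category trail; the helper name and URLs are hypothetical.

```python
import json


def breadcrumb_json_ld(trail):
    """Build a schema.org BreadcrumbList.

    trail: list of (name, url) pairs ordered from the top category to the product.
    """
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": index, "name": name, "item": url}
            for index, (name, url) in enumerate(trail, start=1)
        ],
    }


crumbs = breadcrumb_json_ld([
    ("Apparel", "https://shop.example.com/apparel"),
    ("Shirts", "https://shop.example.com/apparel/shirts"),
    ("Blue Shirt", "https://shop.example.com/products/blue-shirt"),
])
print(json.dumps(crumbs, indent=2))
```

The positions make the parent/child order explicit, so a machine reader does not have to infer it from the page's visual navigation.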

Search OS creates bot-first pages optimized for AI agent consumption. These pages present your core product information in formats designed for machine reading—structured, explicit, and consistently formatted.

The result: In the same time window, bots ingest 300× more information.

The Continuous Optimization Loop

Bot optimization isn't a one-time project. Crawlers change their behavior. AI agents evolve their processing methods. Your product catalog updates. Competitors shift. Search algorithms adjust.

Effective bot infrastructure requires continuous monitoring and adjustment.

The optimization loop works like this:

Monitor. Track what crawlers actually do on your site. Which pages do they prioritize? Where do they encounter errors? How efficiently do they process your content?

Diagnose. When crawl patterns deviate from expectations, identify the cause. Did a site change break structured data? Did crawler behavior shift? Did new competitors capture attention?

Adjust. Make targeted changes based on diagnostic findings. Fix specific issues rather than guessing at solutions.

Verify. Confirm that changes produced intended effects. Monitor subsequent crawl behavior to ensure fixes held.
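The Monitor and Diagnose steps above need some definition of "deviates from expectations". One simple, hypothetical rule is sketched below: compare each day's crawler error rate with the mean of the preceding days and flag days that exceed it by a fixed margin. The seven-day window and 5-point tolerance are arbitrary assumptions, and this is not a description of how Search OS detects problems.

```python
from statistics import mean


def error_rate(stats):
    """stats: dict with 'requests' and 'errors' counts for one day of crawler traffic."""
    return stats["errors"] / stats["requests"] if stats["requests"] else 0.0


def flag_regressions(daily_stats, baseline_days=7, tolerance=0.05):
    """Return indexes of days whose error rate exceeds the trailing baseline
    mean by more than `tolerance` (an absolute difference in rate)."""
    flagged = []
    for day in range(baseline_days, len(daily_stats)):
        window = daily_stats[day - baseline_days:day]
        baseline = mean(error_rate(s) for s in window)
        if error_rate(daily_stats[day]) > baseline + tolerance:
            flagged.append(day)
    return flagged
```

For the Verify step, the same function can be rerun on data collected after a fix: if later days stop being flagged, the fix most likely held.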

This loop never ends, but it compounds. Each iteration builds better baseline performance. Problems get caught earlier. Fixes become more precise.

Search OS runs this loop automatically. The system monitors bot behavior across your site, diagnoses issues as they emerge, implements fixes, and verifies outcomes—continuously, without requiring manual intervention for routine optimization.

The system works until target pages reliably surface in search results and AI responses. Not through ranking guarantees (nobody can promise specific positions), but through systematic infrastructure optimization that removes barriers to discovery.

Platform-Specific Implementation Notes

While bot infrastructure matters more than platform choice, each platform has specific considerations worth addressing.

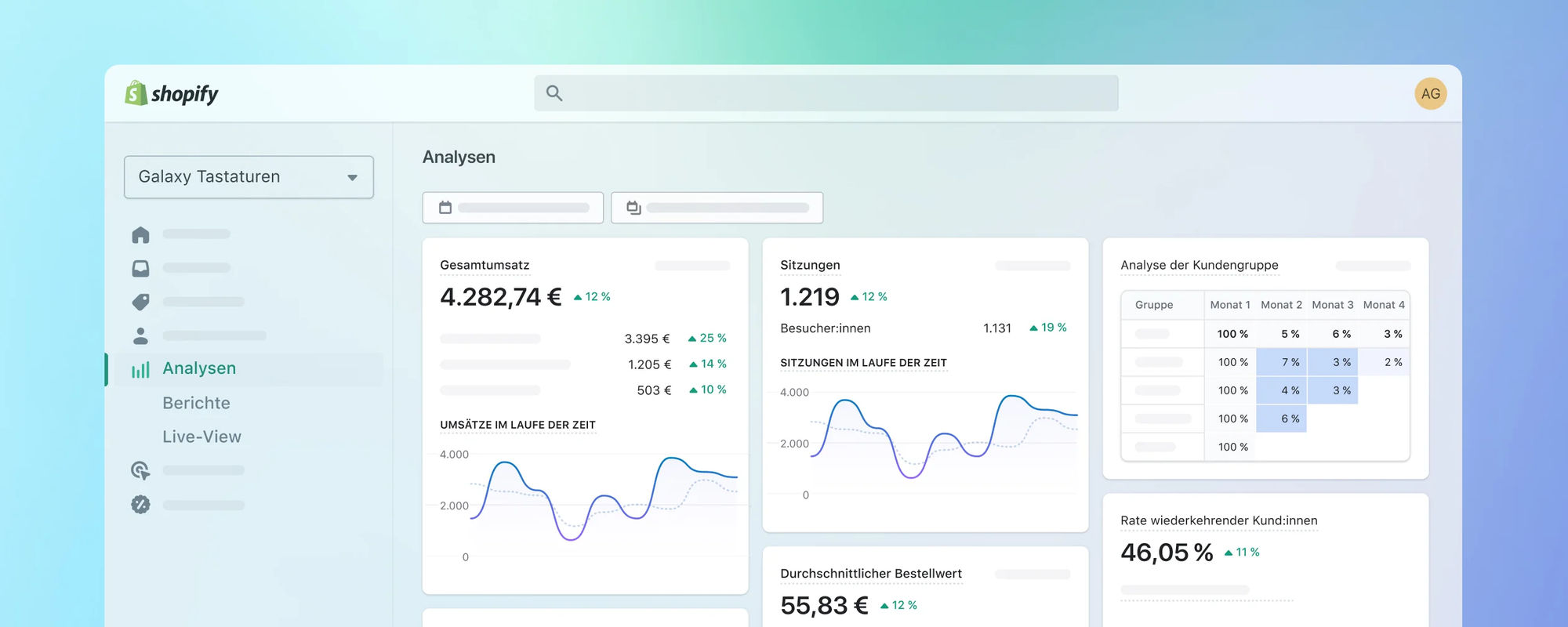

Shopify implementations. Shopify's managed architecture means you're working within the platform's constraints. You can't modify server configurations, but you can add schema markup through your theme templates, optimize content structure, and ensure product data exports cleanly. Shopify's app ecosystem includes SEO tools, but most focus on on-page optimization rather than bot infrastructure. Search OS integrates with Shopify stores to provide the infrastructure layer the platform doesn't include natively.

WordPress implementations. WordPress offers more configuration flexibility but also more failure modes. Plugin conflicts can break schema markup. Theme updates can invalidate structured data. Hosting misconfigurations can block crawlers entirely. The platform's flexibility becomes a liability without systematic monitoring. WordPress users benefit from Search OS's cross-platform integration, which provides consistent bot optimization regardless of the specific plugin and hosting stack.

Other platforms. Wix, Substack, and similar platforms each have their own constraints and capabilities. The underlying principle holds: the platform handles human-facing operations while bot infrastructure requires dedicated attention. Search OS works across these platforms, providing the same bot-first optimization regardless of your specific technology stack.

The key insight: platform choice constrains some tactical decisions but doesn't determine strategic outcomes. Bot visibility depends on infrastructure that sits above platform-level concerns.

What This Means for Your Store

The Shopify vs. WordPress debate will continue. Store owners will weigh setup convenience against customization flexibility, compare pricing models, and assess plugin ecosystems.

These factors matter for daily operations. They don't determine whether bots can find and understand your products.

If you're evaluating platforms, consider operational fit first. Choose the system that matches your team's skills and your business requirements. Don't expect platform choice to solve discoverability problems—that's not what platforms do.

If you're already running a store and struggling with organic visibility, stop looking for platform-level solutions. The issue is almost certainly in the infrastructure layer: missing schema, inefficient crawl paths, bot accessibility gaps, or silent technical failures that your platform doesn't surface.

Search OS addresses this layer directly:

- 300× faster crawling. Optimized infrastructure lets crawlers process your product catalog efficiently.

- 0 crawl failures. Systematic monitoring catches and fixes issues before they block discovery.

- 80% labor cost reduction. Automated optimization eliminates manual SEO maintenance tasks.

The platform runs your store. The infrastructure determines whether anyone finds it.

Getting Started

Three steps to improve bot discoverability regardless of your current platform:

Audit your structured data. Check whether your product pages include valid JSON-LD schema markup. Google's Rich Results Test can validate individual pages; systematic auditing requires examining your entire catalog.

Review crawl behavior. If you have access to server logs, examine how crawlers interact with your site. Look for error rates, crawl frequency patterns, and which pages receive attention. If you're on managed hosting without log access, this diagnostic gap is exactly what Search OS fills.

Map your information architecture. Trace the path from your homepage to individual products. Count how many clicks each product requires. Identify pages that exist solely for human navigation but waste crawler budget. Simplify where possible.
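Counting clicks by hand stops scaling after a few dozen products. Given an internal-link graph (for example, collected by crawling your own site), a breadth-first search returns the minimum click depth of every reachable page, and any product missing from the result is orphaned. The graph, product list, and `MAX_DEPTH` threshold below are hypothetical.

```python
from collections import deque


def click_depths(links, homepage):
    """Breadth-first search over an internal-link graph.

    links: dict mapping each URL to the URLs it links to.
    Returns the minimum number of clicks from the homepage to each reachable URL.
    """
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:  # first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


site = {
    "/": ["/collections/apparel", "/about"],
    "/collections/apparel": ["/collections/shirts"],
    "/collections/shirts": ["/products/blue-shirt"],
}
products = ["/products/blue-shirt", "/products/orphan-hat"]
MAX_DEPTH = 3  # arbitrary threshold for "too deep"

depths = click_depths(site, "/")
too_deep = [p for p in products if depths.get(p, float("inf")) > MAX_DEPTH]
orphans = [p for p in products if p not in depths]
```

Products in `orphans` cannot be reached by following links at all; those in `too_deep` are reachable but buried behind several category pages.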

These steps reveal the current state. Fixing what you find—and maintaining fixes over time—requires infrastructure purpose-built for bot optimization.

Search OS provides that infrastructure. Keyword and prompt prediction identifies what queries your products should appear for. Bot-log-driven analysis diagnoses what's blocking discovery. Automatic schema generation ensures crawlers understand your content. Continuous optimization loops keep everything working as conditions change.

The platform handles commerce. The infrastructure handles discovery. Both matter.